People do not trust AI. How can we fix that?

Artificial intelligence can already predict the future. Police use it to produce maps showing when and where crimes are likely to occur. Doctors use it to predict when a patient may have a stroke or a heart attack. Scientists are even trying to give AI an imagination so that it can anticipate unexpected events.

Many decisions in our lives require good forecasts, and AI agents almost always make them better than people do. Yet despite all these technological advances, we still lack confidence in the forecasts that artificial intelligence produces. People are not used to relying on AI and prefer to trust human experts, even when those experts are wrong.

If we want artificial intelligence to benefit people, we need to learn to trust it. To do that, we need to understand why people so persistently refuse to trust AI.

Trusting Dr. Robot

IBM's attempt to provide oncologists with a supercomputer program (Watson for Oncology) has been a notable failure. The AI promised to provide high-quality advice on the treatment of 12 types of cancer, which together account for 80% of cases worldwide. To date, more than 14,000 patients have received recommendations based on its calculations.

But when doctors first encountered Watson, they found themselves in a rather difficult position. On the one hand, if Watson's treatment recommendations matched their own views, the doctors saw little value in them. The supercomputer was simply telling them what they already knew, and its recommendations did not change the actual treatment. This may have given doctors peace of mind and confidence in their own decisions, but IBM has not shown that Watson actually improves cancer survival rates.

On the other hand, if Watson made recommendations that differed from the experts' opinions, the doctors concluded that Watson was incompetent. And the machine could not explain why its proposed treatment should work, because its machine learning algorithms were too complex for people to understand. This led to even greater distrust, and many doctors simply ignored the AI's recommendations and relied on their own experience.

As a result, IBM Watson's main medical partner, the MD Anderson Cancer Center, recently announced that it was abandoning the program. A Danish hospital also reported that it was dropping the program after finding that its oncologists disagreed with Watson in two cases out of three.

The problem with Watson for Oncology was that doctors simply did not trust it. Human trust often depends on our understanding of how other people think, and experience strengthens our confidence in their opinions. This creates a psychological sense of security. AI, on the other hand, is relatively new and confusing for people. It makes decisions based on complex analysis that looks for potential hidden patterns and weak signals in large amounts of data.

Even when it can be explained in technical language, an AI's decision-making process is usually too complex for most people to understand. Interacting with something we do not understand can cause anxiety and a sense of losing control. Many people simply do not understand how AI works, because it all happens somewhere behind the screen, in the background.

For this reason, people notice the cases in which AI makes a mistake all the more sharply: recall the Google algorithm that classified Black people as gorillas; Microsoft's chatbot, which became a Nazi in less than a day; and the Tesla on Autopilot that was involved in a fatal crash. These bad examples have received disproportionate media attention, reinforcing the message that we cannot rely on technology. Machine learning is not 100% reliable, partly because it is designed by people.

A divided society?

Feelings about artificial intelligence run deep in human nature. Recently, scientists conducted an experiment in which people watched science fiction films about artificial intelligence and were then asked about their attitudes toward automation in everyday life. It turned out that regardless of whether the AI in the film was portrayed positively or negatively, merely watching a cinematic depiction of our technological future polarized the participants' attitudes. Optimists became even more optimistic, and skeptics became even more guarded.

This suggests that people interpret what they see about AI in a biased way that supports their existing attitudes, a deeply rooted tendency known as confirmation bias: the tendency to seek out or interpret information in a way that confirms pre-existing beliefs. Because AI is so often covered in the media, this could contribute to a deeply divided society, split between those who use AI and those who reject it. Whichever group prevails could gain a serious advantage, or find itself at a serious disadvantage.

Three ways out of the AI trust crisis

Fortunately, we already have some ideas about how to improve trust in AI. Simply having experience with AI can significantly improve people's attitudes toward the technology. There is also evidence that the more often you use a particular technology (for example, the Internet), the more you trust it.

Another solution would be to open the "black box" of machine learning algorithms and make their workings more transparent. Companies such as Google, Airbnb and Twitter already publish transparency reports on government requests and data disclosures. A similar practice for AI systems would help people properly understand how algorithms make decisions.

Studies show that involving people in an AI's decision-making process increases their confidence and allows the AI to learn from human experience. One study found that people who were given the opportunity to slightly modify an algorithm were much more satisfied with its results, apparently because of a sense of superiority and of influence over the outcome.
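The article does not describe how the study let people "slightly modify" the algorithm, so the following is only a minimal Python sketch, assuming the modification means a person may shift the algorithm's forecast by no more than a fixed amount. The function `blended_forecast` and the `max_shift` limit are hypothetical illustrations, not the method used in the study.

```python
def blended_forecast(model_forecast: float, user_adjustment: float, max_shift: float = 5.0) -> float:
    """Hypothetical helper: apply a person's adjustment to the model's forecast,
    but only within +/- max_shift, so the human can nudge the result rather than replace it."""
    if max_shift < 0:
        raise ValueError("max_shift must be non-negative")
    # Clamp the requested change to the allowed range.
    bounded = max(-max_shift, min(max_shift, user_adjustment))
    return model_forecast + bounded


# A person who wants to raise the forecast by 12 is limited to a shift of 5.
print(blended_forecast(70.0, 12.0))   # 75.0
print(blended_forecast(70.0, -3.0))   # 67.0
```

The clamp keeps most of the decision with the algorithm while still giving the person a real, if small, say in the outcome.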

We do not need to understand the complex inner workings of AI systems, but if people are given at least a little information about and control over how these systems are implemented, they will trust them more and be more willing to bring AI into their everyday lives.

...Recommended

The Oculus zuest 2 virtual reality helmet for $300. What's he capable of?

Why is the new Oculus zuest 2 better than the old model? Let's work it out together. About a decade ago, major technology manufacturers introduced the first virtual reality helmets that were available to ordinary users. There were two ways to find yo...

The mysteries of neurotechnology - can the brain be used as a weapon?

DARPA has launched the development of a neural engineering system to research a technology that can turn soldiers into cyborgs Despite the fact that the first representatives of the species Homo Sapiens appeared on Earth about 300,000 - 200,000 years...

What materials can be used to build houses on Mars?

Marsha constructions n the surface of the Red Planet SpaceX CEO Elon Musk is hopeful that humans will go to Mars in the next ten years. Adapted for long flight ship Starship is already in development, but scientists have not yet decided where exactly...

Related News

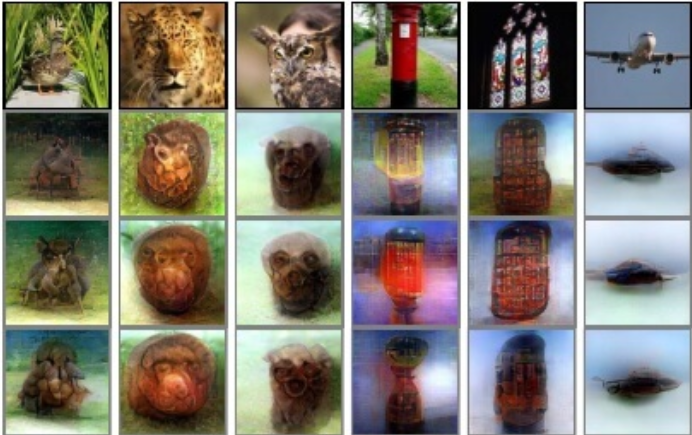

Japanese neural network is taught to "read minds"

it Seems that computers can already read our minds. The Google autocomplete suggested friends in Facebook and targeted advertising that POPs up in your browser, sometimes make you think: how does it work? We are slowly but surely ...

Every Monday in the new issue of «News high-tech» we summarize the previous week, talking about some of the most important events, the key discoveries and inventions. This time we will focus on the failed launch of Space...

#2018 CES | Samsung showed a prototype of bendable smartphone

at the close of the exhibition CES-2018, traditionally held in Las Vegas, the South Korean company Samsung has decided to do a very narrow number of invited participants covered by the cameras flashing, the prototype demonstration...

French designers have developed a airplane bird

As you know, aircraft use wing design, "borrowed" from the birds. However, there is a "live" wing one advantage, which allows better control of the flight: wing are able to deform. But soon the aircraft can acquire such a useful f...

#CES 2018 | Submitted street lamp spy

this year's CES has given us a lot of very interesting announcements from various spheres of science and high technologies. One of the intriguing new startup called Wi-Fiber, which is a "smart" modular led street light. The flashl...

China will use face detection for registration of marriage

Now facial recognition is a technology that is primarily used to locate dangerous criminals and inform the authorities. But soon in China, it can find more "civilian" applications: during the marriage ceremony. Although in this si...

Tesla began installing solar roof tiles in homes of clients

Tesla started installing roofs of the first customers from among the customers. Installation, first worked on the homes of employees of the company. Square meter of the roof from Tesla and SolarCity costing customers $ 220, which ...

Proposed construction of a giant wind farm in the North sea

Most of the world's offshore wind power plants, usually located near the coast of the countries which they feed. However, the ambitious plan for a Dutch energy company offers to create the world's largest wind farm, and an adapted...

Telegram will carry out the ICO and will launch its own cryptocurrency chataway

a Platform for the exchange of encrypted messages Telegram, which is operated by Vkontakte founder Pavel Durov, plans to launch its own blockchain-based platform and cryptocurrency, adding the option to make payments in your appli...

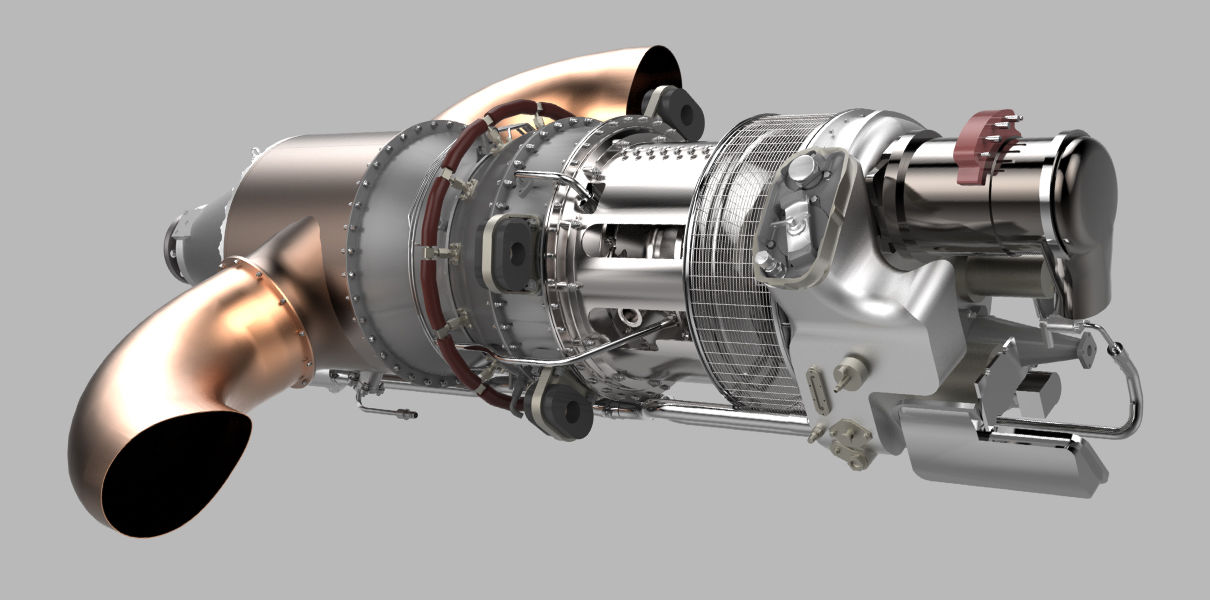

General Electric has printed and tested a turboprop engine

a Division of General Electric Aviation conducted the full test of the turboprop engine printed on a 3D printer. Thanks to modern technology, time to develop a new engine has been reduced from ten to two years, and the number of p...

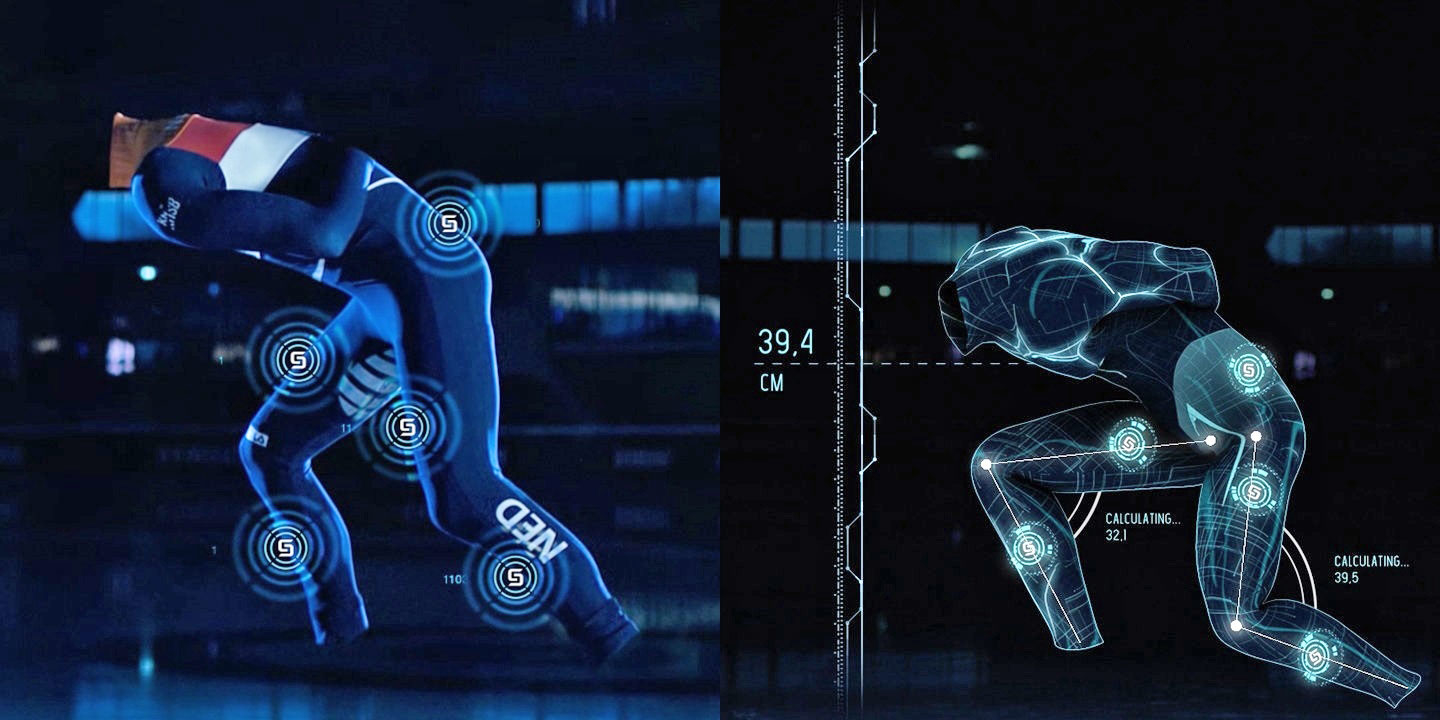

Samsung smart suits help the athletes prepare for the Olympic games

the South Korean company has always been very proud that is one of the largest sponsors of the Olympic games. Winter games in 2018 in Pyeongchang will not be for the company's exception. Only now Samsung is the sponsor of this eve...

Panasonic has introduced a unique exoskeleton

Exoskeletons have long ceased to be the lot of science fiction movies and video games, and many companies are already developing a variety of models of such devices. But if most of the developments used in military training or for...

The world's largest amphibious aircraft made its first flight

the world's largest seaplane – Chinese — made its first test flight from the airport of Zhuhai, located in Guangdong province, according to the publication Popular Mechanics. With the size of the civilian airliner, AG600 abl...

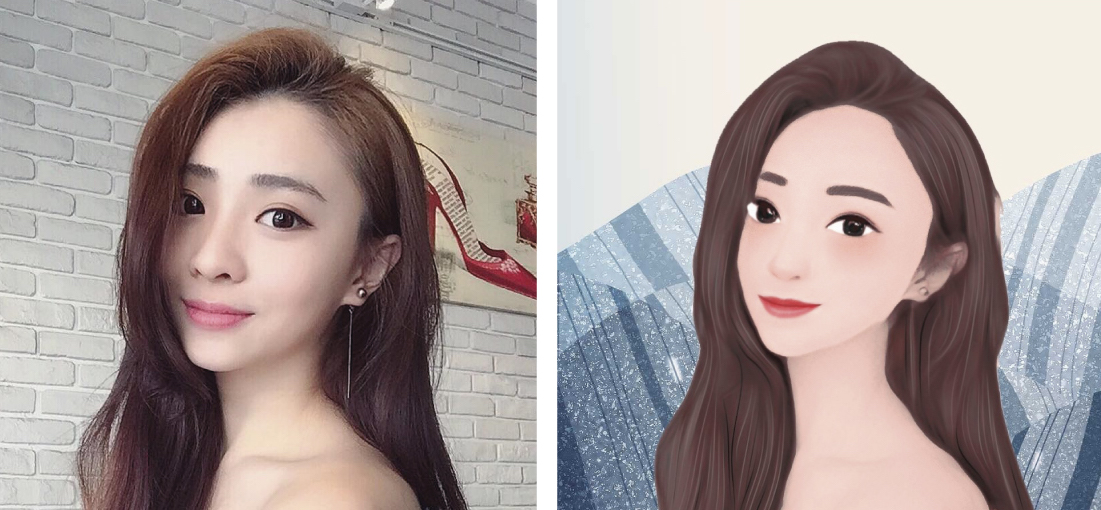

In the application Meitu appeared robot artist, designed with the help of AI

Few who have not heard about , which is using the latest technology to improve the user images. This time the creators of the app decided to go ahead and have built in Meitu a trained robot artist, designed using artificial intell...

How are things with LeEco? Spoiler: not really

the Attempt of the Chinese company LeEco to make a forced March to the American market, and suddenly his win was more unsuccessful than expected. Thrown into this venture, the money burned a big hole in the pocket of the company t...

Musk has promised to release a Tesla pickup truck

the Irrepressible Elon Musk doesn't want to stay in his quest to revolutionize the car market. Not much time has passed since the presentation of the Tesla electric truck and the second generation of the Roadster, as the legendary...

Baidu will be built in China AI-city

Sometimes it seems that the fantastic city of the future, based on the use of high technology will never be created and will remain dreams, because in order to "modify" the modern city requires huge resources! However, the Chinese...

Every Monday in the new issue of «News high-tech» we summarize the previous week, talking about some of the most important events, the key discoveries and inventions. This time we will focus on One Hyperloop, a supersoni...

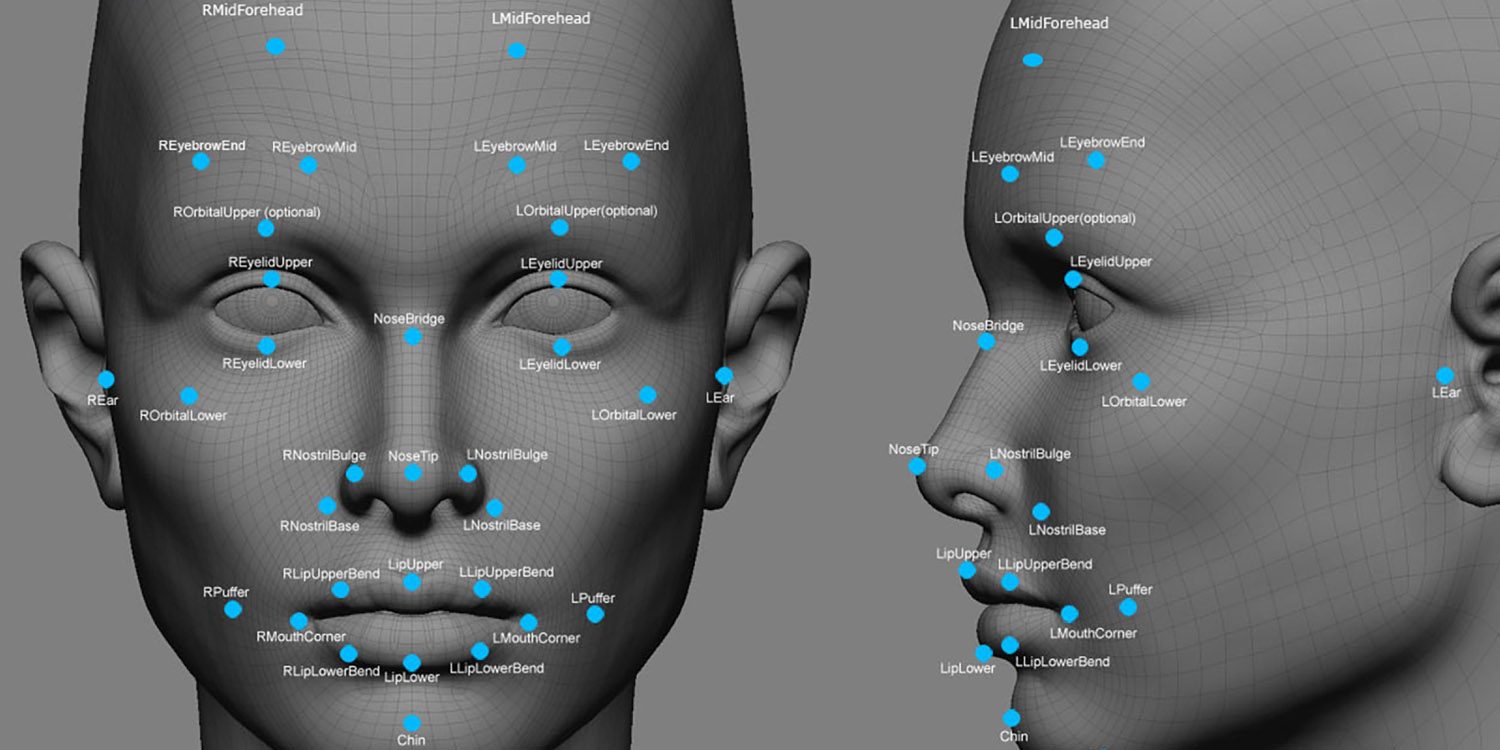

Face recognition: how it works and that it will continue?

You climb the stairs and enter the Elevator. He knows what floor you need. The door opening in front of you. A computer and a phone "know" you and not require a password. Cars, social networks, shops greet you on sight, calling yo...

Scientists have created a neural network with psychic abilities

One of Clarke's laws States: any sufficiently advanced technology is indistinguishable from magic. And if a few centuries ago, the most accurate way to know the future was to go to the shaman or the fortune teller that nothing but...

Comments (0)

This article has no comment, be the first!