Why ethics is the most serious problem for artificial intelligence

Artificial intelligence is already everywhere, and it is here to stay. Many aspects of our lives are shaped by artificial intelligence to some degree. It decides which books we buy, which flight tickets we order, how successful a submitted résumé will be, whether the bank will give you a loan, and which cancer treatment a patient should receive. Many of these uses have not yet reached our side of the ocean, but they will.

All of these things, and many others, can now largely be decided by complex software systems. The huge success of AI over the past few years is astonishing: in many, many cases, AI makes our lives better. The rise of artificial intelligence was inevitable. Huge sums have been invested in AI startups. Many existing tech companies, including giants such as Amazon, Facebook and Microsoft, have opened new research laboratories. It is no exaggeration to say that software now means AI.

Some predict that the advent of AI will be as big an event as the advent of the Internet, or even bigger. The BBC asked experts how we humans should prepare for this rapidly changing world filled with shiny machines. Notably, almost all of their answers were devoted to ethical issues.

Peter Norvig, Director of Research at Google and a pioneer of machine learning, believes that data-driven AI technology raises a particularly important question: how to ensure that these new systems improve society as a whole, and not just those who control them. "Artificial intelligence has proven very effective in practical tasks, from labeling pictures to understanding speech and written natural language to detecting diseases," he says. "The challenge now is to make sure that everyone benefits from this technology."

The big problem is that the complexity of the software often makes it impossible to determine exactly why an AI does what it does. Because modern AI is built on the hugely successful technique of machine learning, you cannot simply open the hood and see how it works. So we just have to trust it. The challenge is to come up with new ways of monitoring or auditing the many areas in which AI plays a big role.

Jonathan Zittrain, Professor of Internet Law at Harvard, believes there is a danger that the growing complexity of computer systems may prevent us from providing an adequate level of scrutiny and control. "I am concerned about the reduction of individual autonomy as our systems, aided by technology, become more complex and tightly coupled," he says. "If we 'set it and forget it', we may regret how the system evolves and that we did not consider the ethical dimension earlier."

This concern is echoed by other experts. "How are we going to certify these systems as safe?" asks Missy Cummings, director of the Humans and Autonomy Laboratory at Duke University in North Carolina. One of the first female pilots in the US Navy, she is now an expert on drones.

AI will need oversight, but it is unclear how to provide it. "Currently, we have no commonly accepted approaches," says Cummings. "And without an industry standard for testing such systems, it will be difficult for these technologies to be widely implemented."

But in a rapidly changing world, regulators often find themselves playing catch-up. In many important areas, such as criminal justice and health care, companies are already enjoying the efficiency of artificial intelligence that decides on parole or diagnoses diseases. By handing these decisions over to machines, we run the risk of losing control: who will check that the system is right in each case?

According to danah boyd, a senior researcher at Microsoft Research, serious questions remain about the values built into these systems and about who is ultimately responsible for them. "Regulators, civil society and social theorists increasingly want these technologies to be fair and ethical, but their concepts of fairness and ethics are vague at best," she says.

One of the areas fraught with ethical problems is employment and the job market in general. AI allows robots to perform more complicated work and to replace large numbers of people. China's Foxconn Technology Group, a supplier to Apple and Samsung, has announced its intention to replace 60,000 factory workers with robots, and Ford's factory in Cologne, Germany, has put robots to work alongside people.

Moreover, if the rise of automation has a big impact on employment, it may harm people's mental health. "If you think about what gives people meaning in life, it is three things: meaningful relationships, passionate interests and meaningful work," says Ezekiel Emanuel, a bioethicist and former health care adviser to Barack Obama. "Meaningful work is a very important element of someone's identity." He says that in regions where jobs were lost when factories closed, there is an increased risk of suicide, substance abuse and depression.

As a result, there is a growing need for ethics experts. "Companies will follow their market incentives. That's not a bad thing, but we can't rely on them to behave ethically just because," says Kate Darling, a specialist in law and ethics at the Massachusetts Institute of Technology. "We have seen this every time a new technology has come along and we have tried to decide what to do with it."

Darling notes that many big-name companies, such as Google, have already set up ethics boards to oversee the development and deployment of their AI. She believes such boards should become more common. "We don't want to stifle innovation, but we do need structures like these," she says.

Details about who sits on Google's ethics board and what it actually does remain vague. But last September, Facebook, Google and Amazon launched a consortium to develop solutions to the many pitfalls related to AI safety and privacy. OpenAI is another such organization, devoted to developing and promoting open-source AI for the benefit of all. "It is important that machine learning has been studied openly and spread through open publications and open-source code, so that we can all share in the rewards," says Norvig.

If we want to develop industry and ethical standards and clearly understand what is at stake, it is important to bring ethicists, technologists and corporate leaders together to brainstorm. The goal is not simply to replace people with robots, but to help people.

...Recommended

The Oculus zuest 2 virtual reality helmet for $300. What's he capable of?

Why is the new Oculus zuest 2 better than the old model? Let's work it out together. About a decade ago, major technology manufacturers introduced the first virtual reality helmets that were available to ordinary users. There were two ways to find yo...

The mysteries of neurotechnology - can the brain be used as a weapon?

DARPA has launched the development of a neural engineering system to research a technology that can turn soldiers into cyborgs Despite the fact that the first representatives of the species Homo Sapiens appeared on Earth about 300,000 - 200,000 years...

What materials can be used to build houses on Mars?

Marsha constructions n the surface of the Red Planet SpaceX CEO Elon Musk is hopeful that humans will go to Mars in the next ten years. Adapted for long flight ship Starship is already in development, but scientists have not yet decided where exactly...

Related News

China has built the world's largest experimental 5G network

As reported by the news Agency «Xinhua», in the capital of China Beijing, Huairou district, built the world's largest experimental network of mobile technology standard 5G. In the work on the establishment of the network...

The company PassivDom started printing completely Autonomous house on a 3D printer

at Home that print in a Ukrainian company obtained a fully Autonomous and «smart», so for life, you can choose any place. The startup says that the company produces Autonomous self-learning modular homes are created with...

the Company Hyperloop Transportation Technologies has previously consulted with the state commissions of the Czech Republic, Slovakia and the United Arab Emirates and discussed the construction of a new transport system on the ter...

Virtual assistant "Google AI Duet" will allow you to become a good pianist

the Developers of artificial intelligence Google are seeking increasingly sophisticated ways of using AI in various fields of science and not only. For example, the Duet presents the Google AI does not invent music and not play so...

In 2021 China will start selling the world's largest amphibian

the First flight test of the Chinese amphibian aircraft to begin in the summer of 2017, but before he first goes up, it should be tested. At the moment the aircraft has passed almost every test, so engineers are already preparing ...

Artificial intelligence will be taught to speed read

Perhaps one of the main difficulties associated with how to do machine learning is really effective, is that you often have to teach the machine to thousands or even millions of examples. But what if you don't have to reserve week...

The project Vertical Forest helps to build "green" skyscrapers

In some cities of China in the near future will be built a few skyscrapers, covered with greenery. Inside are the usual offices, restaurants, shopping malls, and other attributes of conventional buildings. But on the outside they ...

Elon Musk: if people don't want to one day become useless, they should become cyborgs

Humanity is in danger of neglect under the power of artificial intelligence, and he just needs to develop the possibilities of communication with the machines directly or be ready to face the risk of becoming useless, he said in h...

Created reusable paper which can be printed with the light

For the production of paper are used annually tens of tons of wood, and it's acres «the lungs of our planet». Therefore, the reduction of the use of wood is extremely important for our existence. Recycling only partially...

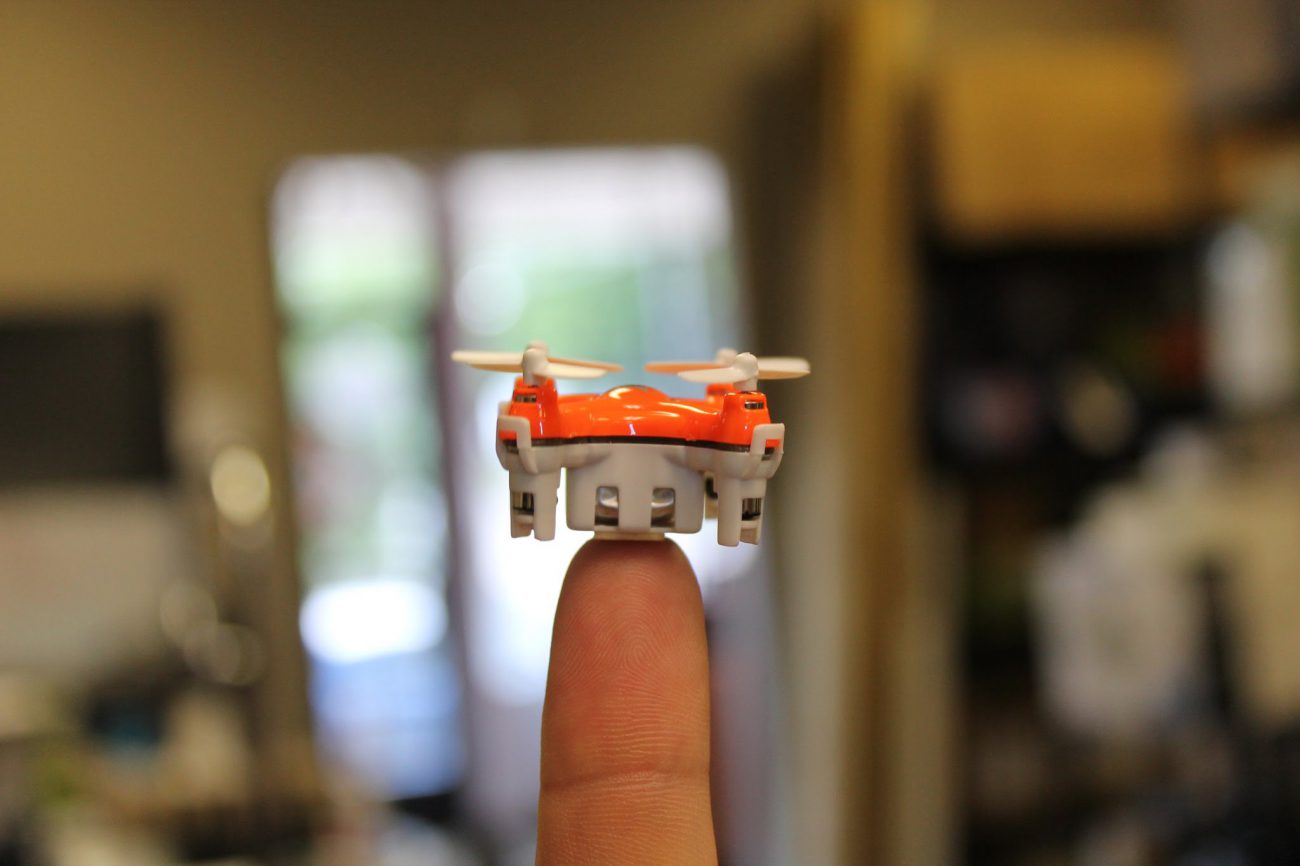

Robots vs insects: why tiny drones are inferior to bees?

Perhaps the last item on the list of things that can forever change thanks to the drones, will not be delivery of goods or the provision of Internet coverage, but a very valuable service... pollination. Scientists from Japan are s...

Developed technology to create high-quality holographic displays

create a holographic display that would be qualitative and accessible to everyone — it is very distant future. But development in this area is ongoing. And recently researchers from the Korean Institute of science and advanc...

More "hyperloop", good and different!

a Former engineer Hyperloop One, resigned from the company last summer, did not sit idly by, and instead founded his own company «Arrival» in which, too, will engage in the construction of hypersonic transport system sim...

British rail pump with high technology

Not only flying into space, mankind lives, we should not forget about the more mundane types of movement. For example, Railways. The management of the Association of train companies, Rail Delivery Group the UK has decided that it ...

Ruselectronics, has developed optical discs based on organic materials

Despite the onset of the digital age and cloud storage information «traditional» optical media, hard drives and other memory elements will not soon be consigned to history. At least in large corporations and government a...

Artificial intelligence Google learns to improve the quality of the images

Remember how in the movie "blade Runner" Rick Deckard, sipping brandy from a massive glass voice commands has increased and reduced the different zones of the image on the screen of your computer in search of evidence? The picture...

Artificial intelligence tries to work as a psychologist

Over the past few years artificial intelligence has tried to teach, it seems, almost everything from complex calculations to a poker game. But Japanese researchers from NTT Resonant company focused their research in several differ...

China has become the largest producer of solar energy in the world

what the Chinese are good at, it's utiranie nose to other States in such fields as industry, construction or even high technologies like genetics. So this time China once again proved that she is capable of much, becoming the larg...

Icelandic architects proposed to create in Reykjavik oases on geothermal energy

Iceland — incredibly beautiful, but pretty cold place. Even in summer there is not much sunbathing, but really want to! Therefore, the architectural company Spor Sandinn i decided to take up gardening Reykjavik, build in the...

The court between the companies ZeniMax and Oculus ended up being pretty unusual

We have already seen that the game publisher ZeniMax (owner of Bethesda studios, id Software, Arcane Studios, etc) filed a lawsuit against the management of the company Oculus, owned by Facebook Corporation. The plaintiff accused ...

Created a tiny device for reproducing holographic images

a Different fantastic films suggests that in the future we will certainly be waiting for the mass consumer devices for projection of holographic images. It can be used for advertising purposes, and in the creation of advanced vers...

Comments (0)

This article has no comment, be the first!