Japanese neural network is taught to "read minds"

It seems that computers can already read our minds. Google's autocomplete, Facebook's friend suggestions, and the targeted advertising that pops up in your browser sometimes make you wonder: how does it work? We are slowly but surely moving toward computers that read our minds, and a new study from Kyoto, Japan, is a clear step in that direction.

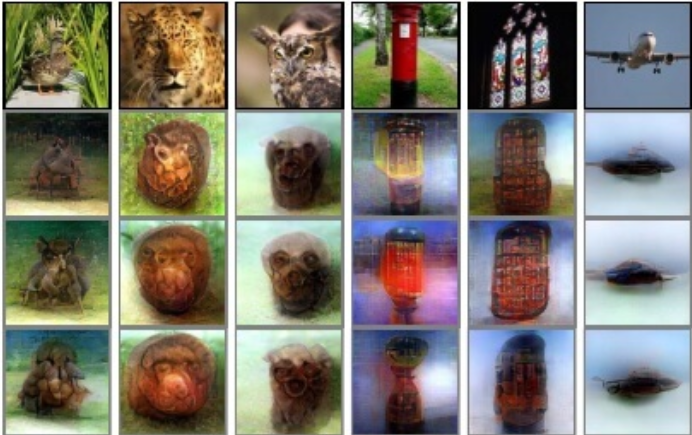

A team of scientists from Kyoto University used a deep neural network to read and interpret people's thoughts. Sounds unbelievable? In fact, this is not the first time it has been done. The difference is that previous methods, and their results, were simpler: they reconstructed images from pixels and basic shapes. The new technique, called "deep image reconstruction", goes beyond binary pixels and lets researchers decode images with multiple layers of color and texture.

"Our brain processes visual information by hierarchically extracting features of different levels, or components of different complexity," says Yukiyasu Kamitani, one of the scientists involved in the study. "These neural networks, or AI models, can be used as a proxy for the hierarchical structure of the human brain."

The study took 10 months. During it, three people viewed images from three categories: natural phenomena (such as animals or people), artificial geometric shapes, and letters of the alphabet.

Brain activity was measured either while the subjects were viewing the images or afterward. To measure brain activity afterward, the subjects were simply asked to think about the images they had been shown.

The recorded activity was then fed to a neural network, which "decoded" the data and used it to generate its own interpretations of the subjects' thoughts.

In humans (and, indeed, all mammals) the visual cortex is located at the back of the brain, in the occipital lobe, which sits above the cerebellum. Activity in the visual cortex was measured using functional magnetic resonance imaging (fMRI) and translated into the hierarchical features of a deep neural network.

Starting from a random image, the network iteratively optimizes the pixel values of that image until the features the neural network extracts from it become similar to the features decoded from brain activity.
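The iterative optimization described above can be illustrated with a toy sketch. This is not the Kyoto team's code: the single `tanh` layer, the random `weights`, the image size, and the names `extract_features` and `reconstruct` are all assumptions made for illustration, and the "decoded" features here are simply computed from a known image rather than from fMRI data. The idea is only to show pixel values being nudged by gradient descent until their features match a target.

```python
import numpy as np

def extract_features(image, weights):
    """Toy stand-in for one DNN layer: a linear map followed by tanh."""
    return np.tanh(weights @ image)

def reconstruct(target_features, weights, steps=300, lr=0.05, seed=0):
    """Gradient descent on pixel values so the image's features approach the target."""
    rng = np.random.default_rng(seed)
    image = rng.normal(scale=0.1, size=weights.shape[1])  # start from a random image
    losses = []
    for _ in range(steps):
        feats = extract_features(image, weights)
        diff = feats - target_features
        losses.append(0.5 * float(diff @ diff))
        # Gradient of 0.5 * ||tanh(W x) - t||^2 with respect to the pixels x.
        grad = weights.T @ (diff * (1.0 - feats ** 2))
        image = image - lr * grad
    return image, losses

# Hypothetical setup: 16 "pixels", 32 features, fixed random weights.
rng = np.random.default_rng(42)
weights = rng.normal(scale=0.25, size=(32, 16))
seen_image = rng.uniform(-1.0, 1.0, size=16)
# Assumption: pretend these features were decoded from brain activity.
decoded_features = extract_features(seen_image, weights)

image, losses = reconstruct(decoded_features, weights)
print(f"feature loss: {losses[0]:.4f} -> {losses[-1]:.4f}")
```

In the study, the feature extractor is a deep network with many layers and the target features come from fMRI recordings; the toy version keeps only the loop structure: random start, compare features, update pixels.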

Importantly, the scientists' model was trained using only natural images (people or nature), yet it learned to reconstruct artificial shapes. This means that the model really "generated" images from brain activity rather than matching that activity against existing examples.

Unsurprisingly, the model had more difficulty decoding brain activity when people were asked to remember an image than when they were viewing it directly. Our brains can't retain every detail of the images we have seen, so memories will always be vaguer.

The reconstructed images from the study retain some resemblance to the original images seen by the participants, but for the most part they look like blobs with minimal detail. However, the accuracy of the technology is expected to improve, and with it the range of possible applications will expand.

Imagine "instant art", where you create a work of art just by picturing it in your head. Or what if an AI recorded your brain activity while you slept and then re-created your dreams for analysis? Last year, completely paralyzed patients were able to communicate with their families through a brain-computer interface.

There are many possible applications of the model used in the Kyoto study. But brain-computer interfaces could also re-create horrific images if we learn to interpret brain activity properly. And the cost of a mistake, should thoughts be read incorrectly, may be too high.

With all this, the Japanese team is not alone in its efforts to create a mind-reading AI. Elon Musk founded Neuralink to create a brain-computer interface between people and computers. Kernel is working on chips that can read and write the neural code. Mind reading is developing, slowly.

Recommended

The Oculus zuest 2 virtual reality helmet for $300. What's he capable of?

Why is the new Oculus zuest 2 better than the old model? Let's work it out together. About a decade ago, major technology manufacturers introduced the first virtual reality helmets that were available to ordinary users. There were two ways to find yo...

The mysteries of neurotechnology - can the brain be used as a weapon?

DARPA has launched the development of a neural engineering system to research a technology that can turn soldiers into cyborgs Despite the fact that the first representatives of the species Homo Sapiens appeared on Earth about 300,000 - 200,000 years...

What materials can be used to build houses on Mars?

Marsha constructions n the surface of the Red Planet SpaceX CEO Elon Musk is hopeful that humans will go to Mars in the next ten years. Adapted for long flight ship Starship is already in development, but scientists have not yet decided where exactly...

Related News

Every Monday in the new issue of «News high-tech» we summarize the previous week, talking about some of the most important events, the key discoveries and inventions. This time we will focus on the failed launch of Space...

#2018 CES | Samsung showed a prototype of bendable smartphone

at the close of the exhibition CES-2018, traditionally held in Las Vegas, the South Korean company Samsung has decided to do a very narrow number of invited participants covered by the cameras flashing, the prototype demonstration...

French designers have developed a airplane bird

As you know, aircraft use wing design, "borrowed" from the birds. However, there is a "live" wing one advantage, which allows better control of the flight: wing are able to deform. But soon the aircraft can acquire such a useful f...

#CES 2018 | Submitted street lamp spy

this year's CES has given us a lot of very interesting announcements from various spheres of science and high technologies. One of the intriguing new startup called Wi-Fiber, which is a "smart" modular led street light. The flashl...

China will use face detection for registration of marriage

Now facial recognition is a technology that is primarily used to locate dangerous criminals and inform the authorities. But soon in China, it can find more "civilian" applications: during the marriage ceremony. Although in this si...

Tesla began installing solar roof tiles in homes of clients

Tesla started installing roofs of the first customers from among the customers. Installation, first worked on the homes of employees of the company. Square meter of the roof from Tesla and SolarCity costing customers $ 220, which ...

Proposed construction of a giant wind farm in the North sea

Most of the world's offshore wind power plants, usually located near the coast of the countries which they feed. However, the ambitious plan for a Dutch energy company offers to create the world's largest wind farm, and an adapted...

Telegram will carry out the ICO and will launch its own cryptocurrency chataway

a Platform for the exchange of encrypted messages Telegram, which is operated by Vkontakte founder Pavel Durov, plans to launch its own blockchain-based platform and cryptocurrency, adding the option to make payments in your appli...

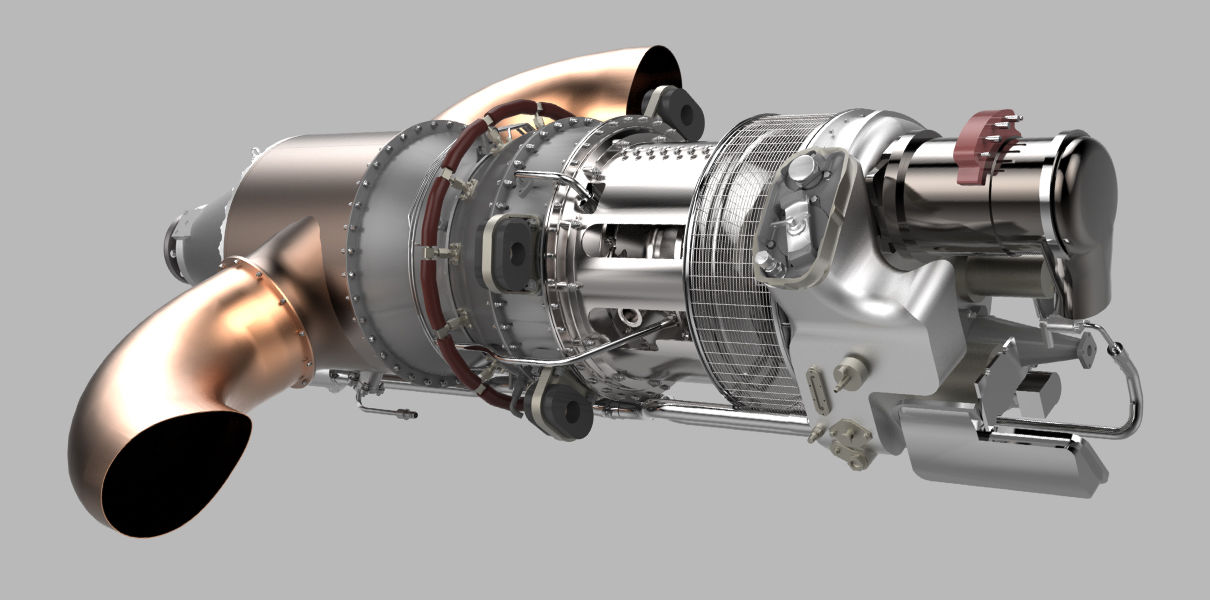

General Electric has printed and tested a turboprop engine

a Division of General Electric Aviation conducted the full test of the turboprop engine printed on a 3D printer. Thanks to modern technology, time to develop a new engine has been reduced from ten to two years, and the number of p...

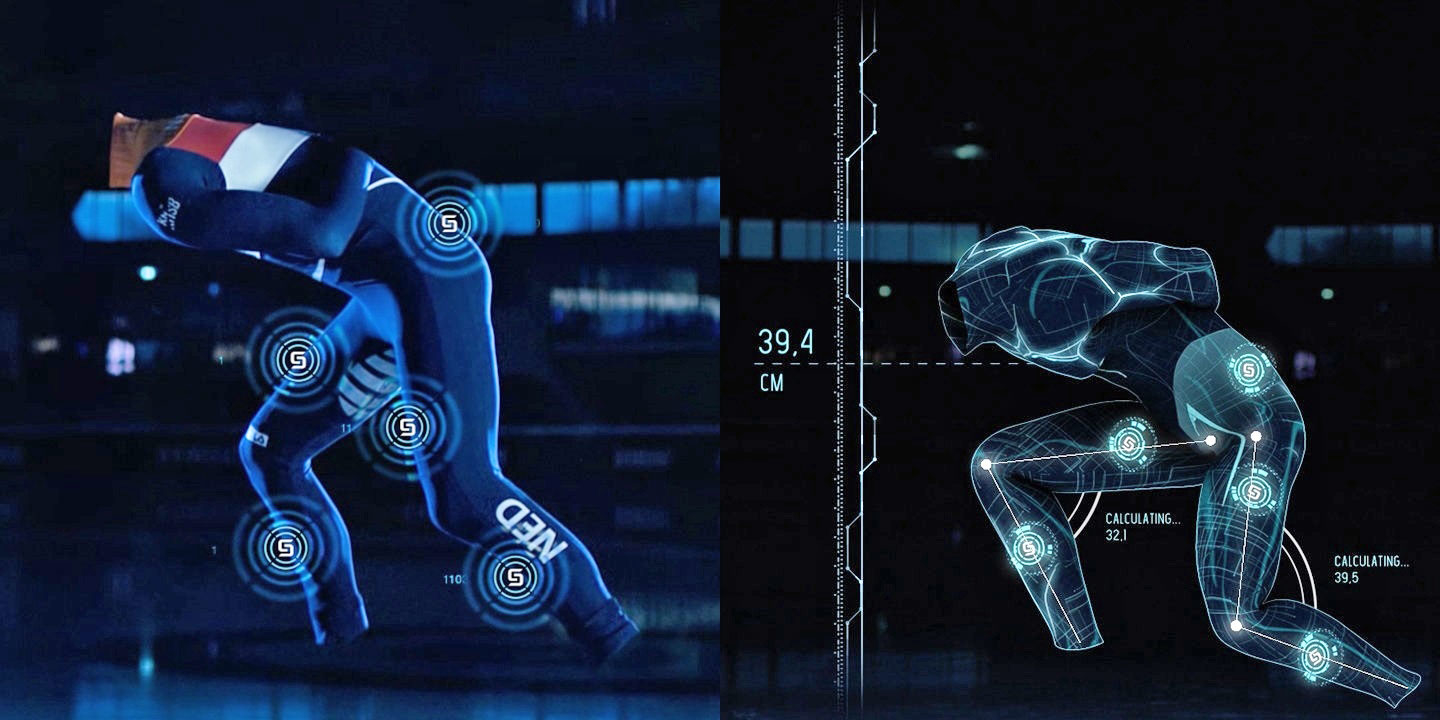

Samsung smart suits help the athletes prepare for the Olympic games

the South Korean company has always been very proud that is one of the largest sponsors of the Olympic games. Winter games in 2018 in Pyeongchang will not be for the company's exception. Only now Samsung is the sponsor of this eve...

Panasonic has introduced a unique exoskeleton

Exoskeletons have long ceased to be the lot of science fiction movies and video games, and many companies are already developing a variety of models of such devices. But if most of the developments used in military training or for...

The world's largest amphibious aircraft made its first flight

the world's largest seaplane – Chinese — made its first test flight from the airport of Zhuhai, located in Guangdong province, according to the publication Popular Mechanics. With the size of the civilian airliner, AG600 abl...

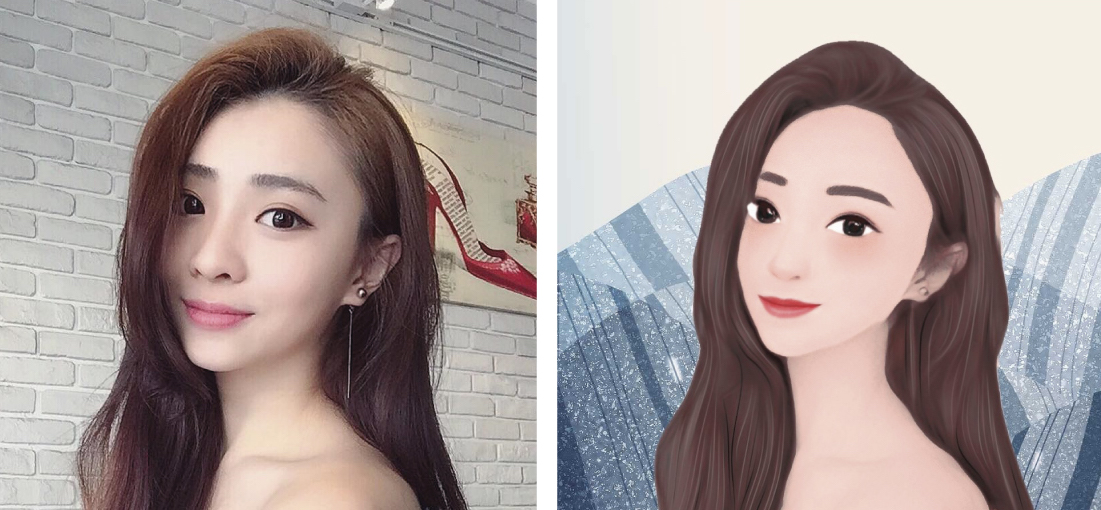

In the application Meitu appeared robot artist, designed with the help of AI

Few who have not heard about , which is using the latest technology to improve the user images. This time the creators of the app decided to go ahead and have built in Meitu a trained robot artist, designed using artificial intell...

How are things with LeEco? Spoiler: not really

the Attempt of the Chinese company LeEco to make a forced March to the American market, and suddenly his win was more unsuccessful than expected. Thrown into this venture, the money burned a big hole in the pocket of the company t...

Musk has promised to release a Tesla pickup truck

the Irrepressible Elon Musk doesn't want to stay in his quest to revolutionize the car market. Not much time has passed since the presentation of the Tesla electric truck and the second generation of the Roadster, as the legendary...

Baidu will be built in China AI-city

Sometimes it seems that the fantastic city of the future, based on the use of high technology will never be created and will remain dreams, because in order to "modify" the modern city requires huge resources! However, the Chinese...

Every Monday in the new issue of «News high-tech» we summarize the previous week, talking about some of the most important events, the key discoveries and inventions. This time we will focus on One Hyperloop, a supersoni...

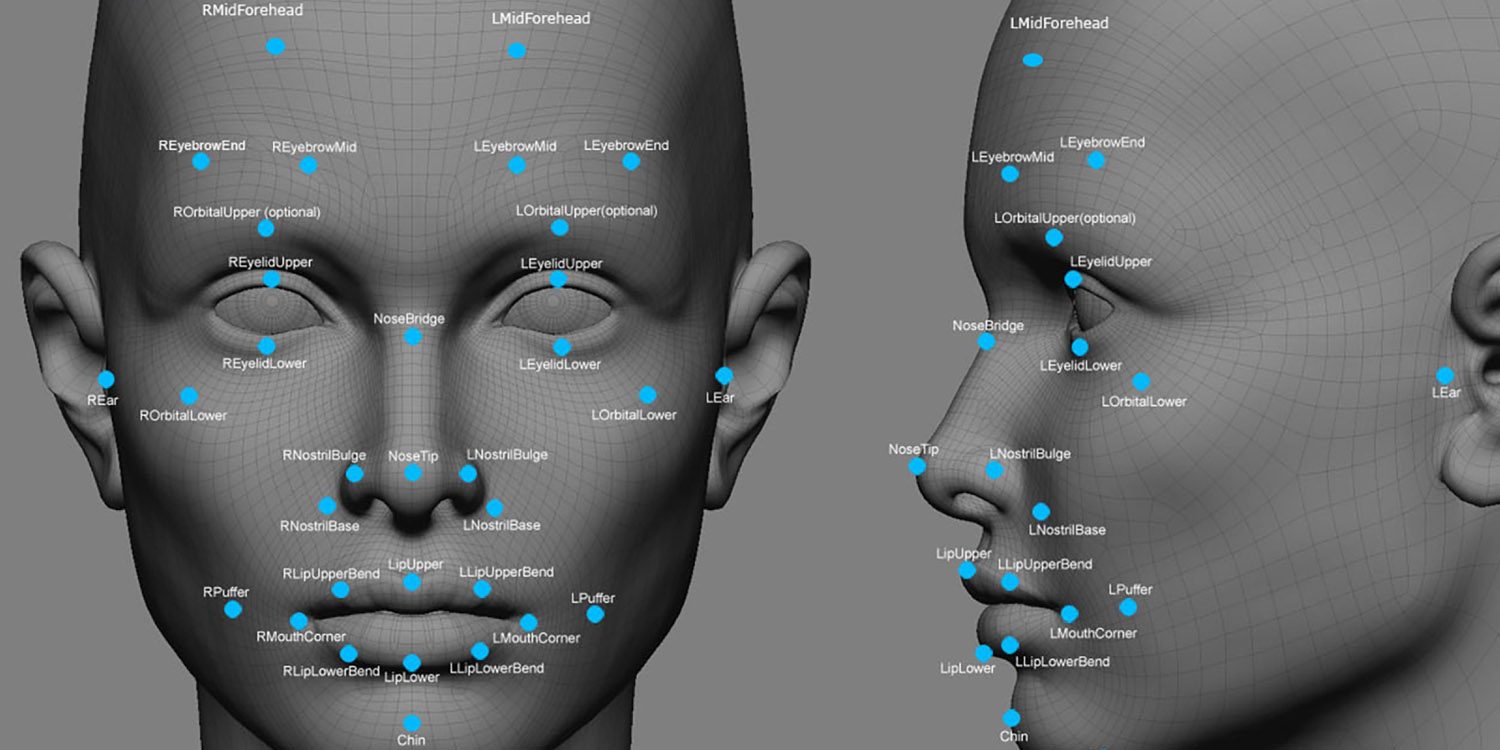

Face recognition: how it works and that it will continue?

You climb the stairs and enter the Elevator. He knows what floor you need. The door opening in front of you. A computer and a phone "know" you and not require a password. Cars, social networks, shops greet you on sight, calling yo...

Scientists have created a neural network with psychic abilities

One of Clarke's laws States: any sufficiently advanced technology is indistinguishable from magic. And if a few centuries ago, the most accurate way to know the future was to go to the shaman or the fortune teller that nothing but...

Bitcoin: a misconception that can conquer the world

Now the price of the cryptocurrency is absolutely unreal. However, for the money. A bar of gold. Disk of iron. A chain of beads. Card of plastic. Cotton roll. These things are useless. You can't eat, drink, and shelter them. But t...

Comments (0)

This article has no comment, be the first!