A new algorithm has brought us closer to a full simulation of the brain


Renowned physicist Richard Feynman once wrote: "What I cannot create, I do not understand. Know how to solve every problem that has been solved." Neuroscience, a field that is steadily gaining momentum, has taken Feynman's words to heart. For theoretical neuroscientists, the key to understanding how intelligence works is to recreate it inside a computer. Neuron by neuron, they are trying to reconstruct the neural processes that give rise to thoughts, memories and feelings. With a digital brain, scientists can test current theories of cognition or explore the parameters that lead to brain dysfunction. As philosopher Nick Bostrom of the University of Oxford has suggested, emulating human consciousness is one of the most promising (and laborious) ways to recreate, and surpass, human ingenuity.

There's only one problem: our computers can't cope with the massively parallel nature of our brains. Packed into a roughly one-and-a-half-kilogram organ are more than 100 billion neurons and trillions of synapses.

Even the most powerful supercomputers today fall short of this scale. Machines such as the K computer at the Advanced Institute for Computational Science in Kobe, Japan, can handle at most 10 percent of the neurons and synapses in the cortex.

Part of the shortfall lies in the software. As computing hardware keeps getting faster, algorithms increasingly become the key to a full simulation of the brain.

This month, an international group of scientists completely overhauled the structure of a popular simulation algorithm, developing a powerful technique that drastically cuts computation time and memory use. The new algorithm works on a wide range of computing hardware, from laptops to supercomputers. When the next generation of supercomputers arrives, expected to be 10 to 100 times more powerful than today's machines, the algorithm can be put to work on these monsters right away.

"Thanks to the new technology, we can exploit the growing parallelism of modern microprocessors much better than before," says study author Jakob Jordan of the Jülich Research Centre in Germany. The work was published in Frontiers in Neuroinformatics.

"This is a crucial step towards creating the technology needed to simulate networks at the scale of the whole brain," the authors write.

The problem of scale

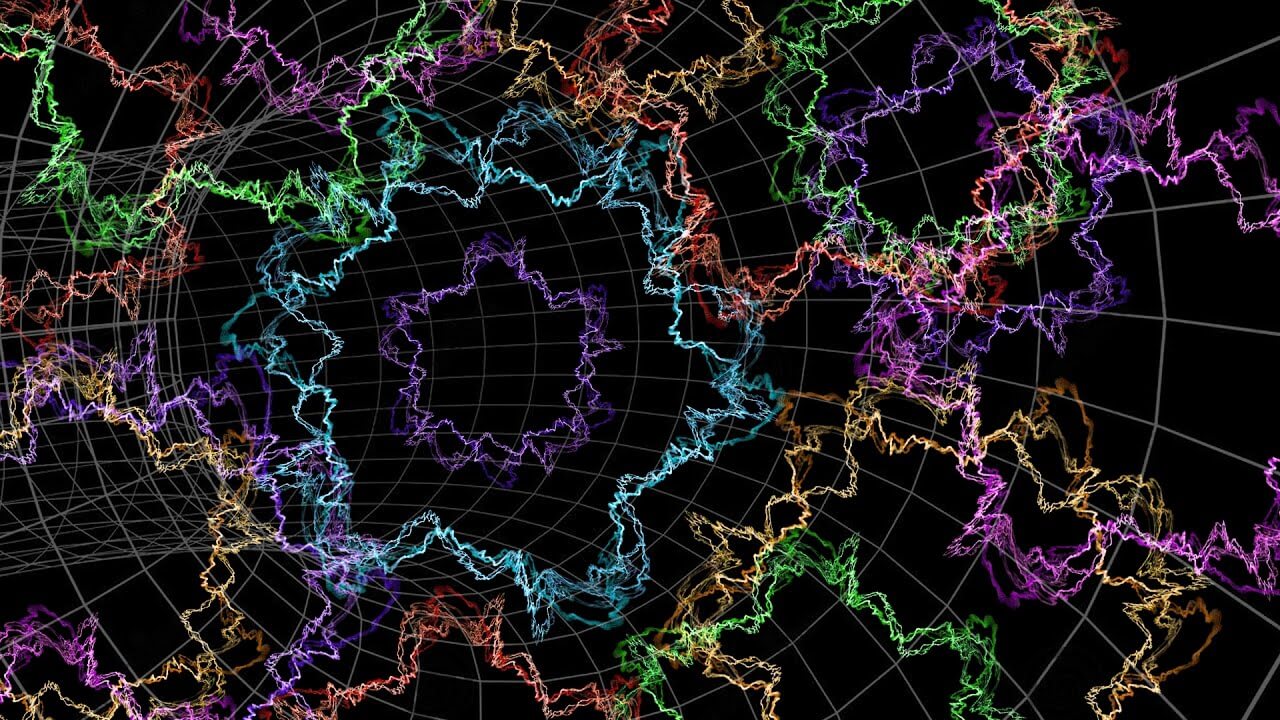

Modern supercomputers consist of hundreds of thousands of subdomains called nodes. Each node contains multiple processing centers, each of which can support a handful of virtual neurons and their connections.

The main problem in brain simulation is how to distribute millions of neurons and their connections across these processing centers efficiently, saving both time and energy.

One of the most popular simulation algorithms is the Memory-Usage Model. Before scientists can simulate changes in their neural network, they must first use the algorithm to create all of its neurons and their connections in the virtual brain. But here's the catch: for each pair of neurons, the model stores all the connection information on the node that hosts the receiving (postsynaptic) neuron. In other words, the presynaptic neuron, which sends out electrical impulses, is shouting into the void; the algorithm has to work out where a particular message should go by looking only at the receiving neuron and the data stored on its node.

It may seem strange, but this arrangement lets every node build its own part of the neural network in parallel. That dramatically cuts the set-up time, which partly explains the algorithm's popularity.

But as you may have guessed, it scales poorly. A sending node broadcasts its message to every receiving node. This means each receiving node has to sort through every message in the network, including those meant for neurons that live on other nodes.

As a result, a huge share of messages is discarded at each node simply because the neuron they are addressed to isn't there. Imagine a post office sending every one of its employees across the country to deliver a single letter. Crazy inefficient, but that is essentially how the memory-usage model works.
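To make the post-office analogy concrete, here is a toy Python sketch of broadcast-style delivery. It is an illustration under stated assumptions, not the simulator's actual code: neurons are assumed to be placed on nodes round-robin by ID (the hypothetical `owner` function), and every spike is shown to every node, which checks it against its local connection data and throws it away if no local neuron is a target.

```python
from collections import defaultdict


def owner(neuron_id, n_nodes):
    """Hypothetical round-robin placement of neurons on nodes."""
    return neuron_id % n_nodes


def broadcast_deliver(spikes, connections, n_nodes):
    """Deliver spikes by broadcasting them to all nodes.

    spikes: IDs of presynaptic neurons that fired.
    connections: list of (source, target) pairs.
    Returns (deliveries, discarded), where discarded counts how many
    times a node received a spike it had no use for.
    """
    # Each node keeps the connections whose target it hosts,
    # indexed by source neuron so it can look up incoming spikes.
    local = [defaultdict(list) for _ in range(n_nodes)]
    for src, tgt in connections:
        local[owner(tgt, n_nodes)][src].append(tgt)

    deliveries, discarded = [], 0
    for src in spikes:
        for node in range(n_nodes):  # every node sees every spike
            targets = local[node].get(src)
            if not targets:
                discarded += 1  # no local neuron is a target: wasted work
                continue
            deliveries.extend((src, tgt) for tgt in targets)
    return deliveries, discarded


deliveries, discarded = broadcast_deliver([0, 2], [(0, 1), (0, 5), (2, 3)], n_nodes=4)
```

With two spikes, three connections and four nodes, the sketch makes three useful deliveries but discards six incoming messages; on a real machine with hundreds of thousands of nodes the ratio gets far worse.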

The problem gets worse as the simulated neural network grows. Each node has to set aside memory for an "address book" that lists all the neural residents of the network and their connections. At the scale of billions of neurons, this "address book" turns into a huge memory sink.
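A back-of-the-envelope Python calculation shows why the address book becomes a problem. The figures are hypothetical placeholders (8 bytes per address-book entry, 8 bytes per synapse, 10,000 synapses per neuron, 10,000 neurons per node); only the scaling matters. The synapse store depends on how many neurons a node hosts, while the address book grows with the whole network.

```python
def per_node_memory(neurons_per_node, n_nodes, synapses_per_neuron,
                    bytes_per_entry=8, bytes_per_synapse=8):
    """Return (address_book_bytes, synapse_bytes) for one node.

    All byte sizes are hypothetical placeholders chosen for illustration.
    """
    total_neurons = neurons_per_node * n_nodes
    # Address book: one entry for every neuron in the entire network.
    address_book = total_neurons * bytes_per_entry
    # Synapse store: only the connections of neurons hosted on this node.
    synapse_store = neurons_per_node * synapses_per_neuron * bytes_per_synapse
    return address_book, synapse_store


# Grow the machine while keeping the load on each node fixed.
for n_nodes in (10, 1_000, 100_000):
    book, syn = per_node_memory(10_000, n_nodes, 10_000)
    print(f"{n_nodes:>7} nodes: address book {book / 1e9:.4f} GB, "
          f"synapses {syn / 1e9:.1f} GB")
```

Under these assumptions, at 100,000 nodes (a billion neurons in total) the address book alone would take 8 GB on every node, ten times the memory of the synapses that node actually hosts.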

Size or source

The scientists cracked the problem by adding an index to the algorithm.

Here's how it works. Each receiving node holds two blocks of information. The first is a database that stores information about all the sending neurons that connect to the node. Because synapses come in several sizes and types that differ in memory use, this database also sorts its information by the type of synapse formed with the neurons on the node.

This setup already differs significantly from its predecessor, in which connection data was sorted by the incoming source neuron rather than by synapse type. As a result, the node no longer has to maintain an "address book".

"The size of the data structure therefore no longer depends on the total number of neurons in the network," the authors explain.

The second block stores data about the actual connections between the receiving node and its senders. Like the first block, it organizes the data by synapse type. Within each synapse type, the data is then separated by source (the sending neuron).

In this way, the algorithm is far more targeted than its predecessor: instead of storing all connection information on every node, receiving nodes store only the data relevant to the virtual neurons they host.
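The two-block layout on a receiving node can be sketched in Python as follows. This is a simplified illustration, not the published data structure: the class name `ReceivingNode`, its methods, and the use of binary search to locate a sender's connections are assumptions made for the example. Block 1 holds the IDs of sending neurons grouped by synapse type; block 2 holds the matching connections, grouped by synapse type and sorted by source. Nothing in it has one entry per neuron of the whole network.

```python
from bisect import bisect_left
from collections import defaultdict


class ReceivingNode:
    """Hypothetical two-block connection store for one receiving node."""

    def __init__(self):
        # Block 1: per synapse type, the IDs of sending neurons.
        self.sources = defaultdict(list)
        # Block 2: per synapse type, (target, weight) entries aligned with block 1.
        self.connections = defaultdict(list)

    def add(self, syn_type, source, target, weight):
        self.sources[syn_type].append(source)
        self.connections[syn_type].append((target, weight))

    def finalize(self):
        # Within each synapse type, sort both blocks by source so that
        # all connections from one sender end up next to each other.
        for syn_type in self.sources:
            pairs = sorted(zip(self.sources[syn_type], self.connections[syn_type]))
            self.sources[syn_type] = [src for src, _ in pairs]
            self.connections[syn_type] = [conn for _, conn in pairs]

    def deliver(self, syn_type, source):
        """Return the local (target, weight) connections hit by a spike."""
        srcs = self.sources.get(syn_type, [])
        i = bisect_left(srcs, source)  # first entry for this sender, if any
        hits = []
        while i < len(srcs) and srcs[i] == source:
            hits.append(self.connections[syn_type][i])
            i += 1
        return hits
```

Because the entries for one sender are contiguous within each synapse type, delivering a spike touches only that sender's connections, and the memory footprint grows with the number of local connections rather than with the size of the network.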

The researchers also gave each sending neuron an address book of its targets. During transmission, the data is split into chunks, and each chunk carries a postal code of sorts that routes it to the right receiving node.
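On the sending side, the idea of a target address book with "postal codes" can be sketched like this. The function names and the round-robin `owner` mapping are hypothetical: each source neuron records which nodes host at least one of its targets, and outgoing spikes are packed into one chunk per destination node, so a node only ever receives spikes that concern it. In a distributed program the chunks would then be exchanged with a collective all-to-all operation (for example `MPI_Alltoall`); here they are simply returned as lists.

```python
from collections import defaultdict


def build_target_tables(connections, owner):
    """Map each source neuron to the set of nodes hosting its targets.

    connections: iterable of (source, target) pairs.
    owner: function mapping a neuron ID to the node that hosts it.
    """
    tables = defaultdict(set)
    for src, tgt in connections:
        tables[src].add(owner(tgt))
    return tables


def pack_chunks(spikes, tables, n_nodes):
    """Split outgoing spikes into one chunk per destination node.

    The chunk index works as the postal code: chunk k goes only to node k.
    """
    chunks = [[] for _ in range(n_nodes)]
    for src in spikes:
        for node in sorted(tables.get(src, ())):
            chunks[node].append(src)
    return chunks


def owner(neuron_id, n_nodes=4):
    """Hypothetical round-robin placement of neurons on four nodes."""
    return neuron_id % n_nodes


tables = build_target_tables([(0, 1), (0, 5), (2, 3)], owner)
chunks = pack_chunks([0, 2, 9], tables, n_nodes=4)
```

Neuron 9 has no targets, so its spike is never sent anywhere, and neurons 0 and 2 each reach exactly one node instead of all four.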

Fast and smart

The modification worked.

In tests, the new algorithm clearly outperformed its predecessor in both scalability and speed. On the JUQUEEN supercomputer in Germany, it ran 55 percent faster than the previous model on a random neural network, mainly thanks to its streamlined data-transfer scheme.

For a network of half a billion neurons, for example, simulating one second of biological activity took about five minutes of JUQUEEN runtime with the new algorithm. Its predecessor took six times as long.
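These JUQUEEN figures translate into a slowdown factor relative to real time. The short Python calculation below uses only the numbers quoted above (about five minutes per biological second for the new algorithm, about six times longer for its predecessor), so the results are approximate.

```python
def slowdown(wall_clock_s, biological_s):
    """Factor by which a simulation runs slower than real time."""
    return wall_clock_s / biological_s


new_factor = slowdown(5 * 60, 1.0)      # about 5 minutes per biological second
old_factor = slowdown(6 * 5 * 60, 1.0)  # predecessor: about six times longer

# Wall-clock hours needed to simulate one minute of biological activity.
new_hours = 60 * new_factor / 3600
old_hours = 60 * old_factor / 3600
print(f"new: {new_factor:.0f}x slower than real time, {new_hours:.0f} h per biological minute")
print(f"old: {old_factor:.0f}x slower than real time, {old_hours:.0f} h per biological minute")
```

In other words, a single minute of activity in the half-billion-neuron network would take roughly 5 hours of machine time with the new algorithm, compared with roughly 30 hours before.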

As expected, several scalability tests showed that the new algorithm handles large networks far more efficiently, cutting the processing time for tens of thousands of data transfers roughly threefold.

"The focus now is on accelerating simulations in the presence of various forms of network plasticity," the authors conclude. Once that is achieved, a digital human brain may finally be within reach.

Recommended

What will be the shelter for the first Martian colonists?

Mars is not the friendliest planet for humans While the Red Planet is roaming rovers, researchers are pondering the construction of shelters and materials needed by future Martian colonists. The authors of the new paper suggest that we could use one ...

New proof of string theory discovered

Just a few years ago, it seemed that string theory was the new theory of everything. But today the string universe raises more questions than answers String theory is designed to combine all our knowledge of the Universe and explain it. When she appe...

What is the four-dimensional space?

Modeling camera motion in four-dimensional space. View the world in different dimensions changes the way we perceive everything around, including time and space. Think about the difference between two dimensions and three dimensions is easy, but what...

Related News

What is a man? Our bacteria can be our masters and not Vice versa

When you were young, everybody told you that you are unique and individual. The idea of individuality has been around for many centuries, but the more we learn about our bodies, the more biologists suspect that the microorganisms ...

China wants to lead the global development of artificial intelligence

it Turns out that China is not just powerfully invests in artificial intelligence. Turns out his experts set out to establish global standards for this technology. Academics, researchers, industry, and government experts gathered ...

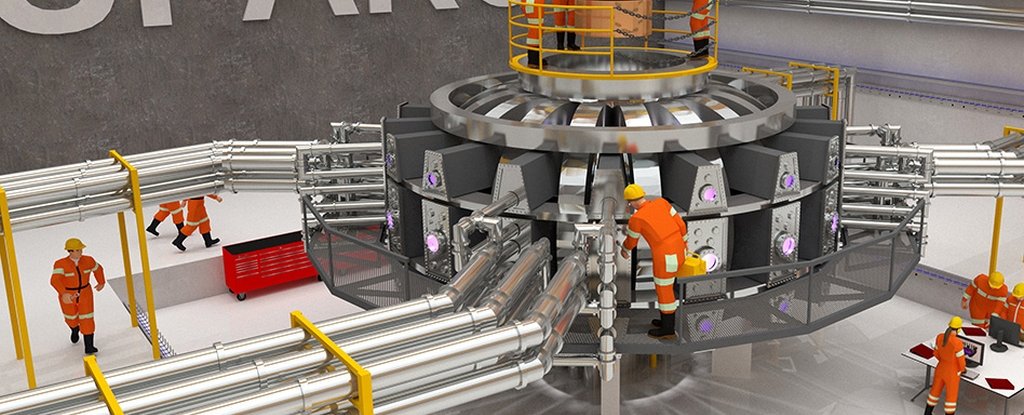

A new startup from MIT set out to run a fusion reactor in 15 years. Seriously?

one well-Known anecdote: nuclear fusion is twenty years. Always will be in twenty years. The joke is now no longer funny, grew out of the optimism of scientists who in the 1950-ies (and in each subsequent decade) thought that nucl...

We still do not understand why time goes only forward

Every passing moment brings us from the past through the present into the future, and there's no way back: the time always flows in one direction. It is not in place and does not go in reverse; arrow of time always points forward ...

Stephen Hawking, hoped that M-theory will explain the Universe. What is this theory?

legend Has it that albert Einstein spent his last hours on Earth, tracing and drawing something on a piece of paper in the last attempt to formulate a theory of everything. 60 years later and another legendary scientist in theoret...

Scientists have created "the white" material

Which color can be considered to be perfectly white? A starched shirt? Medical gown? Or maybe a sheet of paper from the nearest printer? All this, of course, true, but a group of scientists from Cambridge University have managed t...

This student was... How Einstein became the most popular scientists of the galaxy

At the end of 2017 at the auction in Jerusalem was exposed to a sentence of thirteen words, handwritten in German by albert Einstein. In the city archives of Einstein, which he bequeathed before his death in 1955 the Hebrew Univer...

At the age of 76 years died the physicist Stephen Hawking

the Famous British theoretical physicist died on 77-m to year of life, according to BBC radio broadcasting organization with reference to the representatives of the family of the scientist. In 1963, the doctors gave Hawking a disa...

At MIT found a way to harness the energy of stars

Nuclear energy – the dream of scientists and energy companies may soon become a reality. Of physics at the Massachusetts Institute of technology (MIT) and the Commonwealth Fusion Systems has declared readiness to create a working ...

Dutch scientists have developed a DNA test for the detection of illegal felling of trees

DNA Tests is no surprise, however, scientists from Holland have managed to find the application of long-known technique: the new DNA test will be used to benefit the environment, namely to determine how the law was made felling of...

#video | Found a way of 3D printing jewelry from liquid metal

In the distant 90-ies Director James Cameron in the second part of the film «Terminator» presented to the robot from liquid metal T-1000. Then such behavior of the metal (with the exception of mercury and of some experim...

Can artificial photosynthesis be an alternative to solar panels?

In 1912, in the journal Science published an article in which Professor Giacomo Ciamician wrote: "Coal is solar energy offers to mankind in its most concentrated form, but the coal is exhausted. Is fossil solar energy the only one...

Svalbard global seed vault, it was decided to improve

On the island of Spitsbergen, located in the Arctic ocean in 2006 was built a unique structure – the world Bank-selenoprecise. It was decided to store planting material for almost all agricultural plants existing in the world, in ...

In the future we are not going to edit the genome. We will create a new

ever since the Sumerians first want to drink beer, and that was thousands of years ago, Homo sapiens had close relationships with Sacharomyces cerevisae, unicellular fungi known as yeast. Due to the fermentation that people can us...

For the first time, scientists want to move the stuff from one place to another

We all seen and read about how the hero of some sci-Fi movie or book flying on a spaceship that uses as fuel the antimatter, and then landed on a hostile planet, pulls out his Blaster, charges of antimatter and... What happens nex...

Every fossil is a small miracle. As noted by bill Bryson in his book "a Short history of almost everything", just one bone in a billion becomes a fossil. In such calculations, all fossilized legacy of the 320 million people living...

Scientists have invented a new way to store data inside DNA

the Future of technology lies not only in the constant growth of computing power of processors or the transition to quantum computers, but also in the evolution of storage devices. Humanity generates a huge amount of information, ...

Five amazing facts about our ancestors that we have learned from DNA

Not so long ago scientists used DNA of one of the oldest English skeletons (10,000 years) to find out how it looked the first inhabitants of Britain. However, ancient DNA of the skeleton is taken not for the first time and reveals...

Scientists got the "impossible" form of ice that can only exist on Uranus

a team of scientists from Lawrence Livermore National laboratory Lawrence had a unique form of ice, called superionic ice (superionic ice). The main feature of this ice is that it consists of a solid crystal lattice of oxygen atom...

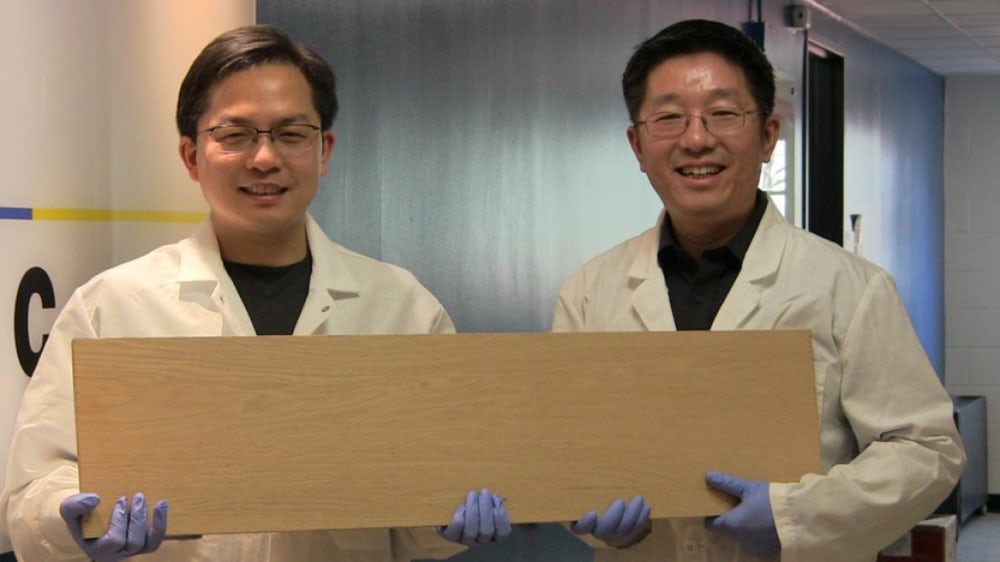

Created superdivision, on the strength comparable to metal

Titanium alloys, perhaps, one of the most durable materials on our planet. But they have two very unpleasant drawback: they are very heavy and very expensive. Scientists from the University of Maryland (UMD) have invented an alter...

Comments (0)

This article has no comment, be the first!