The artificial intelligence of Geoffrey Hinton: the father of "deep learning"

Artificial intelligence. So much is said about it, yet one could argue that it has not really begun. Almost everything you hear about progress in artificial intelligence is based on a breakthrough that is thirty years old. Sustaining that progress will require getting around serious limitations. What follows is a first-person account by James Somers.

I am standing in what will soon be the center of the world, or perhaps just in a big room on the seventh floor of a gleaming tower in downtown Toronto, depending on how you look at it. I am accompanied by Jordan Jacobs, co-founder of this place: the Vector Institute, which opens its doors this fall and promises to become the global epicenter of artificial intelligence.

We are in Toronto because Geoffrey Hinton is in Toronto. Geoffrey Hinton is the father of deep learning, the technique underlying the current hype around AI. "In 30 years we will look back and say that Geoff is the Einstein of AI, of deep learning, of the thing we call artificial intelligence," says Jacobs. Among AI researchers, Hinton is cited more often than the next three combined. His students and graduates go on to work in the AI labs at Apple, Facebook, and OpenAI; Hinton himself is a lead scientist at Google Brain AI. Virtually every achievement in AI over the past ten years, in translation, speech recognition, image recognition, and game playing, rests in one way or another on Hinton's work.

The Vector Institute, this monument to the ascent of Hinton's ideas, is a research center in which companies from all over the US and Canada, such as Google, Uber, and NVIDIA, sponsor efforts to commercialize AI technologies. Money is flowing in faster than Jacobs can ask for it; two of his co-founders surveyed companies in Toronto, and the demand for AI experts was 10 times higher than what Canada produces each year. The Vector Institute is, in a sense, ground zero for the attempt to mobilize the world around deep learning: to invest in the technique, to teach it, to hone it, and to apply it. Data centers are being built, skyscrapers are filling with startups, and an entire generation of students is joining the field.

When you stand on the floor of the Vector Institute, you get the feeling that you are at the beginning of something. But deep learning is, in essence, very old. Hinton's breakthrough paper, written together with David Rumelhart and Ronald Williams, was published in 1986. The work describes in detail the method of backpropagation of errors, or "backprop" for short. Backprop, according to John Cohen, is "what all of deep learning is based on, literally everything."

If you look at the root, today's AI is deep learning, and deep learning is backprop. That is amazing, given that backprop is more than 30 years old. It is worth understanding how this happened: how could a technique lie in wait for so long and then cause such an explosion? Because once you learn the history of backprop, you will understand what is happening with AI now, and also that we may not be standing at the beginning of a revolution. We may be at the end of one.

The walk from the Vector Institute to Hinton's office at Google, where he spends most of his time (he is now a professor emeritus at the University of Toronto), is a kind of living advertisement for the city, at least in the summer. It becomes clear why Hinton, who hails from the UK, moved here in the 1980s after working at Carnegie Mellon University in Pittsburgh.

Maybe we are not at the beginning of a revolution

Toronto is the fourth-largest city in North America (after Mexico City, New York, and Los Angeles) and certainly the most diverse: more than half of its population was born outside Canada. You can see this when you walk around the city. The crowd is multinational. There is free health care and there are good schools, the people are friendly, and the politics are relatively left-leaning and stable; all this attracts people like Hinton, who says he left the U.S. because of "Irangate" (the Iran-Contra affair, a major political scandal in the United States in the second half of the 1980s, when it became known that some members of the U.S. administration had organized secret arms shipments to Iran, thereby violating the arms embargo against that country). This is where our conversation begins, before dinner.

"Many believed that the US could invade Nicaragua," he says. "They somehow believed that Nicaragua belonged to the United States." He says that he recently achieved a major breakthrough on a project: "I began working with a very good junior engineer," a woman named Sara Sabour. Sabour is Iranian, and she was denied a visa to work in the United States. Google's Toronto office took her on.

Hinton is 69 years old. He has a sharp, thin English face with a thin mouth, large ears, and a proud nose. He was born in Wimbledon, and in conversation he sounds like the narrator of a children's book about science: curious, engaging, eager to explain. He is funny and a bit of a showman. Sitting hurts him because of back problems, so he cannot fly, and at the dentist's office he lies on a device that resembles a surfboard.

In the 1980s, Hinton was, as he is now, an expert on neural networks, a greatly simplified model of the network of neurons and synapses in our brains. At that time, however, it had been firmly decided that neural networks were a dead end in AI research. Although the first neural network, the Perceptron, was developed in the late 1950s and was considered a first step toward human-level machine intelligence, in 1969 Marvin Minsky and Seymour Papert proved mathematically that such networks could perform only the simplest functions. These networks had just two layers of neurons: an input layer and an output layer. Networks with many layers of neurons between input and output could, in theory, solve a wide variety of problems, but no one knew how to train them, so in practice they were useless. Because of Minsky and Papert's book "Perceptrons," almost everyone abandoned the idea of neural networks, with a few exceptions, Hinton among them.

Hinton's breakthrough in 1986 was to show that backpropagation could train a deep neural network, one with more than two or three layers. But it took another 26 years for computing power to catch up. In a 2012 paper, Hinton and two of his students from Toronto showed that deep neural networks trained with backprop beat the best existing image recognition systems. "Deep learning" began to gain momentum. The world suddenly decided that AI was about to take over. For Hinton, it was a long-awaited victory.

Reality distortion field

A neural network is usually depicted as a sandwich, with layers stacked on top of one another. These layers contain artificial neurons, which are essentially small computational units that get excited, the way a real neuron fires, and pass that excitement on to the other neurons they are connected to. A neuron's excitement is represented by a number, say 0.13 or 32.39, that expresses how excited it is. There is another important number on each connection between two neurons, which determines how much excitement is passed from one to the other. This number models the strength of the synapses between neurons in the brain. The higher the number, the stronger the connection, which means more excitement flows from one neuron to the other.
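To make the two kinds of numbers concrete, here is a minimal Python sketch of how one neuron's excitement could be computed from the neurons feeding into it. The function name and the weight values are assumptions chosen for illustration, and real networks usually also pass the sum through a nonlinear function, which is left out here.

```python
def neuron_excitement(upstream_excitements, connection_weights):
    """Sum each upstream neuron's excitement, scaled by its connection weight."""
    return sum(x * w for x, w in zip(upstream_excitements, connection_weights))


# Two upstream neurons with the excitement values used in the text.
upstream = [0.13, 32.39]
# Hypothetical connection strengths: a strong link and a weaker one.
weights = [2.0, 0.5]

# 0.13 * 2.0 + 32.39 * 0.5, roughly 16.455
result = neuron_excitement(upstream, weights)
print(result)
```

Raising a weight lets more of the upstream excitement through, which is the "stronger connection" described above.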

One of the most successful applications of deep neural networks is image recognition. Today there are programs that can recognize whether a picture contains a hot dog. Ten years ago they were impossible. To make them work, you first need a picture. For simplicity, say it is a black-and-white image of 100 by 100 pixels. You feed it to the neural network by setting the excitement of each simulated neuron in the input layer equal to the brightness of the corresponding pixel. This is the bottom layer of the sandwich: 10,000 neurons (100 x 100) representing the brightness of every pixel in the image.
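As a rough illustration of that input step, the sketch below flattens a 100 x 100 grid of brightness values into the 10,000 numbers of the bottom layer. The uniformly gray image and the 0-to-1 brightness scale are assumptions made only to keep the example self-contained.

```python
WIDTH, HEIGHT = 100, 100


def image_to_input_layer(image):
    """Flatten a 2D grid of pixel brightness values, row by row, into one list."""
    return [pixel for row in image for pixel in row]


# Hypothetical stand-in for a real photo: every pixel has brightness 0.5.
image = [[0.5] * WIDTH for _ in range(HEIGHT)]

input_layer = image_to_input_layer(image)
print(len(input_layer))  # one excitement value per input neuron
```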

You then connect this large layer of neurons to another large layer of neurons above it, say a few thousand, and those in turn to another layer, a smaller one, and so on. Finally, the top layer of the sandwich, the output layer, consists of just two neurons, one representing "hot dog" and the other "not a hot dog." The idea is to train the neural network to excite only the first of these neurons if the picture shows a hot dog, and only the second if it does not. Backprop, the error backpropagation method on which Hinton has built his career, does exactly that.
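The layered sandwich can be sketched as a chain of weighted sums, each layer feeding the next. This is a scaled-down illustration, not the network from the article: the layer sizes (16, 8, 4, 2), the random weights, and the sigmoid squashing function are all assumptions chosen so the example runs instantly.

```python
import math
import random


def sigmoid(z):
    """Squash any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def make_layer(n_in, n_out, rng):
    """One weight per connection: n_out neurons, each with n_in incoming weights."""
    return [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]


def forward(inputs, layers):
    """Pass excitement upward through the sandwich, one layer at a time."""
    activations = inputs
    for weights in layers:
        activations = [
            sigmoid(sum(w * a for w, a in zip(row, activations)))
            for row in weights
        ]
    return activations


rng = random.Random(0)
sizes = [16, 8, 4, 2]  # tiny stand-in for 10,000 -> thousands -> ... -> 2
layers = [make_layer(n_in, n_out, rng) for n_in, n_out in zip(sizes, sizes[1:])]

pixels = [rng.random() for _ in range(sizes[0])]  # made-up pixel brightnesses
hot_dog, not_hot_dog = forward(pixels, layers)
print(hot_dog, not_hot_dog)
```

With untrained random weights the two output values carry no meaning yet; training is what makes the "hot dog" neuron respond to hot dogs.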

Backprop is remarkably simple, although it works best with huge amounts of data. That is why big data matters so much to AI, why Facebook and Google pursue it so zealously, and why the Vector Institute decided to partner with four of Canada's leading hospitals and share data.

In this case, the data takes the form of a million images, some with hot dogs and some without; the trick is that each image is labeled as to whether it contains a hot dog. When you first create a neural network, the connections between neurons have random weights, random numbers that say how much excitement is passed along each connection. It is as if the brain's synapses have not yet been tuned. The goal of backprop is to change these weights so that the network works: so that when you feed a picture of a hot dog into the bottom layer, the "hot dog" neuron in the topmost layer ends up excited.
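The idea of starting from random weights and nudging them until the output matches the label can be sketched in a few lines. This is a toy version under loud assumptions: four made-up "pixels" per image, three hidden neurons, a single "hot dog" output neuron instead of two, a sigmoid squashing function, squared error, and a hand-picked learning rate. It is not the code from the 1986 paper.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(x, w_hidden, w_out):
    """Return the hidden-layer excitements and the output neuron's excitement."""
    hidden = [sigmoid(sum(w * xi for w, xi in zip(row, x))) for row in w_hidden]
    out = sigmoid(sum(w * h for w, h in zip(w_out, hidden)))
    return hidden, out


def backprop_step(x, target, w_hidden, w_out, lr):
    """Adjust every weight slightly in the direction that reduces the error."""
    hidden, out = forward(x, w_hidden, w_out)
    # Error signal at the output neuron (squared error through the sigmoid).
    delta_out = (out - target) * out * (1.0 - out)
    # Send the error backwards to each hidden neuron, using the old weights.
    delta_hidden = [delta_out * w * h * (1.0 - h) for w, h in zip(w_out, hidden)]
    for j, h in enumerate(hidden):
        w_out[j] -= lr * delta_out * h
    for j, row in enumerate(w_hidden):
        for i, xi in enumerate(x):
            row[i] -= lr * delta_hidden[j] * xi


def total_error(examples, w_hidden, w_out):
    return sum(0.5 * (forward(x, w_hidden, w_out)[1] - t) ** 2 for x, t in examples)


rng = random.Random(0)
n_in, n_hidden = 4, 3
# Random starting weights: the "synapses" are not yet tuned.
w_hidden = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hidden)]
w_out = [rng.uniform(-1, 1) for _ in range(n_hidden)]

# Hypothetical labeled data: (pixel brightnesses, 1.0 = hot dog, 0.0 = not).
examples = [
    ([0.9, 0.1, 0.8, 0.2], 1.0),  # stand-in for a hot dog picture
    ([0.1, 0.9, 0.2, 0.7], 0.0),  # stand-in for a piano picture
]

error_before = total_error(examples, w_hidden, w_out)
for _ in range(2000):
    for x, target in examples:
        backprop_step(x, target, w_hidden, w_out, lr=0.5)
error_after = total_error(examples, w_hidden, w_out)
print(error_before, error_after)
```

Each pass changes the weights only slightly; the network becomes useful through many such small corrections, which is one reason large labeled datasets matter.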

Suppose you take your first training picture, and it is a picture of a piano. You convert the pixel intensities of the 100 x 100 image into 10,000 numbers, one for each neuron in the bottom layer of the network. As the excitement spreads up through the network according to the connection strengths between neurons in adjacent layers, it eventually reaches the last layer, the two neurons that say whether there is a hot dog in the picture. Because this is a picture of a piano, the "hot dog" neuron should show zero, and the "not a hot dog" neuron should be...

Recommended

Can genes create the perfect diet for you?

Diet on genotype can be a way out for many, but it still has a lot of questions Don't know what to do to lose weight? DNA tests promise to help you with this. They will be able to develop the most individual diet, because for this they will use the m...

How many extraterrestrial civilizations can exist nearby?

If aliens exist, why don't we "hear" them? In the 12th episode of Cosmos, which aired on December 14, 1980, co-author and host Carl Sagan introduced viewers to the same equation of astronomer Frank Drake. Using it, he calculated the potential number ...

Why does the most poisonous plant in the world cause severe pain?

The pain caused to humans by the Gimpi-gympie plant can drive him crazy Many people consider Australia a very dangerous place full of poisonous creatures. And this is a perfectly correct idea, because this continent literally wants to kill everyone w...

Related News

Chinese robots-researchers will fly to the moon instead of people

All work on the study of the moon will be in automatic mode, — China Daily, citing the high-ranking officer of China information. The lunar exploration program, including landing Rovers and research existing plans of the Chi...

DARPA invests $ 100 million in the development of genetic weapons

according to the Guardian, the Office of advanced research projects USA (DARPA) began developing weapons through genetic engineering. To work on the project, the Department has allocated 100 million U.S. dollars. New weapons will ...

Bacteria could be turned into nanobots

Nanobots could be very useful for a variety of things: they could conduct operations to explore previously inaccessible places, to diagnose the body and deliver drugs in specific locations of the human body… However, that is...

Scientists have added two new letters to the genetic code

As is known to encode a huge amount of information in the genetic code, used only 4 nucleic acids: adenine, guanine, thymine and cytosine. In the genetic code, they are marked with the corresponding letters — A, G, T and C. ...

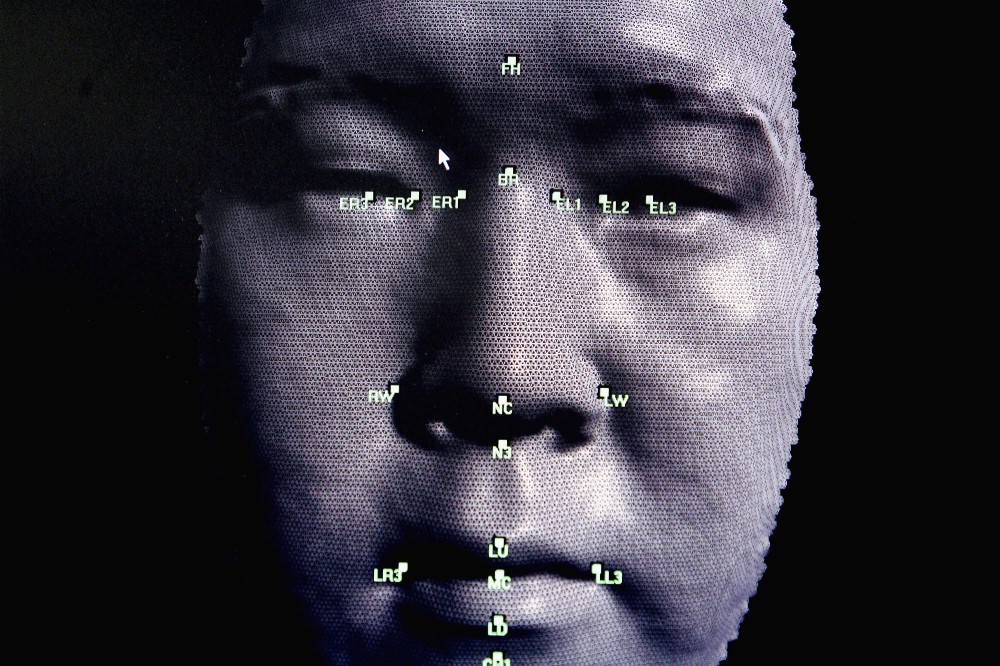

Diseases will be identified, just by scanning the retina

Periodic diagnostics and preventive examinations help to reveal a lot of disease at an early stage, but some conditions can be diagnosed only when the disease is already quite long. In addition, a life-threatening pathology can't ...

Created the first semi-synthetic bacteria with synthetic DNA

All biological life on planet Earth is based on four nucleic acid (nitrogenous) bases of DNA: A, T, C and G (adenine, cytosine, thymine and guanine). But what if man will be able to create a new artificial nucleic acid bases and s...

Astronomers have discovered 72 new galaxy

Through the use of a new scientific instrument MUSE (Multi Unit Spectroscopic Explorer) is installed on the Very large telescope (VLT) the European southern Observatory in Chile, astronomers were able to detect 72 of the new galax...

Earthworms are able to live and reproduce in the Martian soil

Very interesting experiment was conducted by the employees in the Netherlands. They asked the American space Agency NASA for a copy , recreated on the basis of data obtained from several Mars missions, and then placed in the groun...

Astronomers have discovered an exoplanet with an orbital period of 27 000 years

Each planet in our Solar system has considerably different from the rest of the orbital period of rotation around the Sun. For example, if the Earth's one revolution around the star takes exactly 365.25 days, the same Mars it take...

Artificial intelligence will plunge into the universe of molecules in the search for amazing drugs

Dark night, away from city lights, the stars of the milky Way seem innumerable. But from any point visible to the naked eye not more than 4500 stars. In our galaxy, they are 100 to 400 billion galaxies in the Universe, and even mo...

Scientists have discovered how different types of alcohol affect emotions

for anybody not a secret that alcohol is directly associated with the emotional state of a person. It lifts the mood, and someone, on the contrary, depressing. Someone liberates alcohol, and someone makes a more aggressive and com...

How to increase the ability of the brain?

it is Known that with aging, all the reserves in our body are depleted and the organs and tissues gradually start to lose their function. According to numerous studies, after 40 years, the volume of the human brain decreases on av...

Is matter leave the event horizon during the merger of black holes?

as soon As you get to the event horizon of a black hole, you will never leave. There is no speed that you could gain, even the speed of light to allow you to enter. But in General relativity space is curved in the presence of mass...

The Amish have discovered a mutation that overcomes diabetes, and even aging

the Amish, also known as the Amish, — it is a religious community living in several US States and in Canada. The Amish are distinguished by their simplicity of life, dress, and reluctance to adopt most modern technologies an...

The first explosion at Chernobyl was a nuclear, say scientists

the Accident at the Chernobyl NPP occurred on 26 April 1986, has become one of the worst in the history of mankind. Previously it was thought that the explosion at the fourth unit was because in the experiment, the uncontrolled he...

In New Zealand proposed to deal with animal pests by using CRISPR

the Technology of gene editing CRISPR finds more and more different applications. In New Zealand, for example, decided to get rid of rodents and other pests. Mouse possums, rats and other rodents cause New Zealand a huge financial...

In China want to build a space Shuttle with a nuclear engine

In a new report published by the Chinese Corporation of space science and technology (CASC) is dedicated to the space program of China, contains many ambitious goals that the Corporation aims for the next 20 years. Some of them En...

To predict how will the evolution of people, impossible

we All know how the Neanderthals looked like: a prominent brow, thick nose, elongated skull, powerful bone structure, and probably red hair and freckled skin. You may look askance at the redheads when they meet in the subway, but ...

Scientists have discovered another promising templatebuy planet

Just 11 light years from the Solar system astronomers have discovered a new earth-like planet with a climate that can be expected to come to life. It should be noted that after Proxima b is the second closest exoplanet to us to ha...

13 scientifically proven signs that you are smarter than you think

Everyone wants to appear more modest. "Who is smart? I? No, I just got lucky..." this is Due, perhaps, primarily because most of us don't want to be the object of attention or someone's prejudice. Of course, there are those who ju...

Comments (0)

This article has no comment, be the first!