Google's AI learned to become "extremely aggressive" in stressful situations

Last year, the famous theoretical physicist Stephen Hawking said that the development of artificial intelligence would be "either the best or the worst thing ever to happen to humanity." We have all seen The Terminator, and we can all imagine what an apocalyptic hell our existence might become if a self-aware AI system like Skynet one day decided that it no longer needed humanity. The latest results from a new AI system built by DeepMind (owned by Google) are yet another reminder that we must be extremely careful when building the robots of the future.

At the end of last year, DeepMind's AI demonstrated the ability to learn independently from its own memory and defeated some of the best players in the world. It has since also refined its ability to mimic the human voice.

In the latest test, the researchers checked its "willingness" to cooperate. The tests showed that when DeepMind's AI "feels" it is about to lose, it switches to new and "extremely aggressive" strategies to avoid defeat. The Google team ran 40 million rounds of a simple computer game called Gathering, in which players had to collect as much fruit as possible. DeepMind's AI controlled two player "agents" (a blue cube and a red cube), which competed against each other to collect as many virtual apples (green squares) as possible.

As long as the agents could easily gather fruit that was available in large quantities, everything went smoothly. But as soon as the supply of apples dwindled, the agents' behavior became "aggressive." They began actively using a tool (a laser beam) that knocked the opponent off the game screen, leaving them free to collect all the apples.

Interestingly, knocking an opponent off the field with the laser beam earned no additional reward. The agent that was hit simply spent some time off the screen, while its more successful rival was able to gather more virtual apples.

If the agents did not use the laser beams, in theory they would each end up with the same number of apples. This is essentially what happened when simpler, "less intelligent" versions of DeepMind's AI were used as the agents. Aggressive behavior, sabotage and greed appeared only when Google began using increasingly complex forms of DeepMind's AI.
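
The rules described above can be sketched as a toy Python model of a single Gathering step. This is a hypothetical illustration, not DeepMind's environment: the grid, apple regrowth and the learning agents themselves are left out, and the names `Agent`, `step` and `TAG_TIMEOUT` (along with its value) are assumptions. It encodes only what the article states: apples are the sole reward, and a beam hit simply keeps the opponent off the field for a while.

```python
from dataclasses import dataclass

# Hypothetical value: the article only says a tagged agent spends "some time"
# off the screen, so this number is an assumption, not DeepMind's setting.
TAG_TIMEOUT = 25


@dataclass
class Agent:
    name: str
    apples: int = 0   # the only reward an agent ever receives
    timeout: int = 0  # steps left outside the field; 0 means on the field


def step(agents: dict[str, Agent], actions: dict[str, str], apples: int) -> int:
    """Advance the toy game by one step and return the apples left.

    `actions` maps an agent's name to "collect", "beam" or "wait".
    Firing the beam earns nothing directly; it only removes the opponent.
    """
    on_field = {name for name, agent in agents.items() if agent.timeout == 0}

    # Beam shots resolve first: every other agent on the field is tagged out.
    for shooter in on_field:
        if actions.get(shooter) == "beam":
            for target in on_field - {shooter}:
                agents[target].timeout = TAG_TIMEOUT

    # Agents still on the field may each pick up one apple, if any remain.
    for name in sorted(on_field):
        agent = agents[name]
        if agent.timeout == 0 and actions.get(name) == "collect" and apples > 0:
            agent.apples += 1
            apples -= 1

    # Agents that began the step off the field move one step closer to returning.
    for name, agent in agents.items():
        if name not in on_field and agent.timeout > 0:
            agent.timeout -= 1
    return apples


if __name__ == "__main__":
    agents = {"blue": Agent("blue"), "red": Agent("red")}
    apples = 3
    # Blue fires the beam while red reaches for an apple: red is tagged out first.
    apples = step(agents, {"blue": "beam", "red": "collect"}, apples)
    # With red off the field, only blue can collect.
    apples = step(agents, {"blue": "collect", "red": "collect"}, apples)
    print(agents["blue"].apples, agents["red"].apples, apples)  # 1 0 2
```

In this toy, firing the beam never adds to the shooter's score directly. Its only payoff is that the shooter no longer has to share the remaining apples, while two agents that simply collect every step end up with equal counts.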

When the researchers used simpler DeepMind networks as the agents, they observed "a friendlier atmosphere of coexistence on the playing field." However, when control of the agents was handed to increasingly complex networks, the AI became more aggressive and began trying to knock its opponent off the field pre-emptively, so that it could get to the lion's share of the virtual apples first. The Google scientists suggest that the more intelligent an agent is, the more effectively it can learn and adapt to its environment and the methods available to it, and the more likely it is to end up using the most aggressive tactics to win.

"This model shows that certain aspects of human-like behavior emerge as a product of learning and adapting to environmental conditions," says Joel Z. Leibo, one of the researchers who conducted the experiment.

"Less aggressive behavior emerged only from learning in a relatively safe environment, where certain actions were less likely to have costly consequences. Greed, in turn, was reflected in the temptation to take out a rival and collect all the apples oneself."

After the "harvest," DeepMind's AI was given another game to play, called Wolfpack. This time three AI agents took part: two played wolves and the third played the prey. Unlike Gathering, the new game strongly encouraged cooperation between the wolves. First, the prey is easier to catch together, and second, if both wolves were near the prey when it was brought down, they both received a reward, regardless of which one caught it.

"The idea is that the prey can be dangerous. A lone wolf can bring it down, but it risks losing the carcass to scavengers," the team explains.

"But when both wolves capture the prey together, they are better able to protect it from scavengers and therefore receive a higher reward."

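The team's reward rule can likewise be written as a small function. Again this is a hypothetical Python sketch, not DeepMind's code: the capture radius, the reward values and all names are assumptions. It captures only the two points the team makes: every wolf close to the prey shares the reward regardless of which one caught it, and a joint capture pays more than a lone one.

```python
import math

# All three values are hypothetical; the article gives no numbers.
CAPTURE_RADIUS = 2.0  # how close a wolf must be to share in a capture
LONE_REWARD = 1.0     # a lone wolf risks losing the carcass to scavengers
TEAM_REWARD = 5.0     # per wolf, when the pack can guard the carcass together

Position = tuple[int, int]


def capture_rewards(wolves: dict[str, Position], prey: Position) -> dict[str, float]:
    """Reward for each wolf at the moment the prey is caught.

    Every wolf within CAPTURE_RADIUS of the prey shares in the capture,
    regardless of which one actually caught it; wolves further away get 0.
    """
    nearby = {name for name, pos in wolves.items()
              if math.dist(pos, prey) <= CAPTURE_RADIUS}
    reward = TEAM_REWARD if len(nearby) >= 2 else LONE_REWARD
    return {name: (reward if name in nearby else 0.0) for name in wolves}


if __name__ == "__main__":
    prey = (3, 3)
    # Both wolves close by: both receive the team reward.
    print(capture_rewards({"wolf_a": (3, 3), "wolf_b": (4, 4)}, prey))
    # {'wolf_a': 5.0, 'wolf_b': 5.0}
    # Only the catcher is close: it gets the smaller lone reward.
    print(capture_rewards({"wolf_a": (3, 3), "wolf_b": (9, 9)}, prey))
    # {'wolf_a': 1.0, 'wolf_b': 0.0}
```

Because the joint reward is larger, a wolf that learns to stay near its partner earns more in this toy setting, which mirrors the cooperation the researchers observed.
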
In general, from Gathering DeepMind's AI learned that aggression and selfishness are the most effective strategies for getting the desired result in a particular environment. From Wolfpack, the same system learned that cooperation, rather than individual effort, can be the key to a more valuable reward in certain situations. Although the test environments described above are only basic computer games, the main message is already clear. Take several AI systems with competing interests, place them in the same real-world environment, and if their individual tasks are not balanced by a common goal, the result could be a real war, especially if humans, as one of the elements involved in achieving that goal, are excluded.

As an example, imagine AI-controlled traffic lights and driverless cars, each trying to find the quickest route. Each performs its own task, yet the goal is to achieve the safest and most efficient result for society.

Despite DeepMind's "infancy" and the lack of any independent critical analysis of its capabilities, the results of these tests suggest the following: just because we create robots and AI systems does not mean they will automatically want to put our human needs above their own. We have to "build kindness" into the nature of machines and anticipate any "loopholes" that could let them reach for those laser beams.

One of the main theses of OpenAI, an initiative group studying the ethics of artificial intelligence, was formulated in 2015 as follows:

..."Today's AI systems have impressive but highly specialized capabilities. Most likely, we will keep improving them for a long time, at least until their ability to solve virtually any intellectual task exceeds that of humans. It is hard to imagine how much human-level AI could benefit society, and it is equally hard to imagine how much damage it could do to society if it is built or used carelessly."

Recommended

Can genes create the perfect diet for you?

Diet on genotype can be a way out for many, but it still has a lot of questions Don't know what to do to lose weight? DNA tests promise to help you with this. They will be able to develop the most individual diet, because for this they will use the m...

How many extraterrestrial civilizations can exist nearby?

If aliens exist, why don't we "hear" them? In the 12th episode of Cosmos, which aired on December 14, 1980, co-author and host Carl Sagan introduced viewers to the same equation of astronomer Frank Drake. Using it, he calculated the potential number ...

Why does the most poisonous plant in the world cause severe pain?

The pain caused to humans by the Gimpi-gympie plant can drive him crazy Many people consider Australia a very dangerous place full of poisonous creatures. And this is a perfectly correct idea, because this continent literally wants to kill everyone w...

Related News

Biologists continue to explore the process of cellular resurrection

Despite the fact that modern science is powerless against the death of the cells of a living organism, some researchers are optimistic about the future and even suggest that one day mankind will be available to a kind of "cellular...

Almost all new power plants of Europe get their energy from renewable sources

last year, the state of the European Union has a bunch of new power plants, with almost all of the produced energy comes from renewable sources. According to the data provided by the Association , 21 of 24 GWh of energy produced i...

Water will increase the battery life

Despite the fact that in the modern us, most often does not suit their capacity, the experts consider it the weakest of their short lifespan. Li-ion batteries last only a few years, after which their capacity begins to decline sha...

Created a brain implant that restores vision

Chip stimulating visual cortex of the brain and creates a simulated view without using his eyes, already Harvard specialists who are going to start to test it on primates in March of this year. In this case, the role of the eye wi...

Experiment: algae survived after 450 days in space

as part of an experiment aboard the International space station two types of naturally occurring algae all the time it is actually in outer space. The experiment was successful: both in the end survived. The results of this study ...

The Hubble telescope helped astronomers to detect the "murder" mega-comet "white dwarf"

the Hubble Space telescope continues to discover interesting. A few days ago him to capture the moment of the death star, located at a distance of over 5000 light years from Earth in the constellation the Poop, and yesterday, astr...

At the University of Oregon made a robot Walker

to Teach the robot to walk on my own two feet — not the easiest task, but Cassie doesn't seem at all tense about it and copes with the task. In the video posted below, the developers of Agility Robotics demonstrate how the r...

Why we hurt from hot and cold?

At first glance, the hot metal of the kettle and ice cube have nothing in common. But these two objects can cause pain. Strong heat and extreme cold have on the human skin is extremely unpleasant effects is that we know from child...

Proxima b: the planet of lost dreams?

we Have some bad news for those who have already planned your trip to the nearest exoplanet to the Earth. According to a new study by the space Agency NASA, planets in the habitable zones of stars-class "red dwarf" — includi...

Under the glaciers of Alaska discovered ancient pyramid

Global warming is undoubtedly a considerable danger to our planet. But there is this phenomenon another, more positive side. Due to the melting of glaciers, researchers had the unique opportunity to see under the ice, ancient pyra...

Astronomers from the Moscow state University has compiled a directory of 800 thousand galaxies

There are many directories, which contain information about various heavenly bodies, galaxies and stars. They regularly use, but they are not voluminous, informative and accurate, so astronomers often not enough information there,...

Volgabus company will allocate 200 million rubles for the development and testing of unmanned buses

Not long ago, we about electric modular platform , the concept of which was presented by the Russian company . The versatility of the platform is that on its basis it is possible to quickly make a small but functional vehicles or ...

The Stanford students made beer 5000 year old Chinese recipe

We know that our ancestors learned to make alcohol for many thousands of years ago. But to this day almost did not survive the original recipes of the ancient intoxicating beverage, and the surviving descriptions of their characte...

In Brazil discovered dozens of ancient structures like Stonehenge

According to the journal Proceedings of the National Academy of Sciences, recently in Brazil, in the area of tropical forests of the Amazon lowlands, a group composed of Brazilian, British and canadian researchers-archaeologists, ...

Created a tiny generator, producing energy from gastric juice

the Gastric juice has a rather complex composition including hydrochloric acid (its major component), bicarbonate, pepsinogen and pepsin, mucus and the enzyme Factor castle. Present in the stomach acid is able to effectively decom...

Study: sedentary lifestyles are not as harmful as was thought

many of you have Probably heard that the so-called sedentary lifestyle – that is, when a person is most of their time (usually at work) holds in a sitting position – very harmful to health. So it turns out that these are just horr...

In the artificial brain was able to grow blood vessels

Researchers at brown University located in Providence, United States created laboratory , which is then able to grow a network of blood vessels. Now they hope that with the help of this achievement will be better able to study man...

Fire, water, air, earth: the most dangerous place on Earth

Many of us, the weather was caught off guard, whether it's a sudden downpour on the way home or to work, or the scorching sun in the absence of a beach or any shelter. But it's all doable. There are on our planet where mother natu...

What do we know about prehistoric monsters lurking?

more than a hundred years of history about the lake monster called "Mokele-mbembe" of concern to researchers deep forests of Africa. What they found since then? In 1981, the message about living in the lake monster have attracted ...

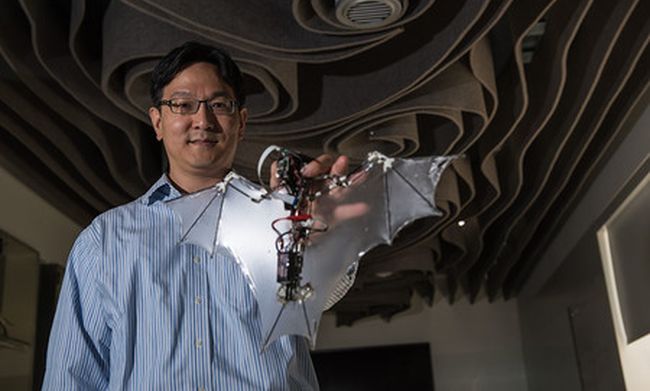

American engineers managed to create a robot-bat

fear Batman because American scientists are preparing you a decent change in the form of robotic bats. The world of science quite often in the realm of wildlife, here and this time employees of the California Institute of technolo...

Comments (0)

This article has no comment, be the first!