The world's fastest supercomputer has broken an artificial intelligence record

Along America's West Coast, the world's most valuable companies are racing to make artificial intelligence smarter. Google and Facebook have boasted of experiments using billions of photos and thousands of high-performance processors. But late last year, a project in eastern Tennessee quietly surpassed the scale of any corporate artificial intelligence lab. And it was run by the U.S. government.

US government supercomputer breaks records

The record-setting project involved the world's most powerful supercomputer, Summit, located at Oak Ridge National Laboratory. The machine took the crown in June last year, returning the title to the US after five years in which China led the list. As part of a climate research project, the giant computer ran a machine learning experiment faster than any before it.

Summit, which covers an area equivalent to two tennis courts, used more than 27,000 high-end graphics processors in this project. It applied their power to training deep learning algorithms, the technology that underlies advanced artificial intelligence. During training, the algorithms ran at a rate of a billion billion operations per second, a pace known in supercomputing circles as an exaflop.

"Deep learning has never before reached this level of performance," says Prabhat, who leads a research group at the National Energy Research Scientific Computing Center at Lawrence Berkeley National Laboratory. His group collaborated with researchers at Summit's home base, Oak Ridge National Laboratory.

Fittingly, the AI training run on the world's most powerful computer focused on one of the world's biggest problems: climate change. Technology companies train algorithms to recognize faces or traffic signs; the government scientists trained theirs to recognize weather patterns such as cyclones in the output of climate models, which produce a century's worth of three-hour forecasts of the Earth's atmosphere. (It is unclear, however, how much energy the project consumed and how much carbon was released into the air in the process.)

The Summit experiment matters for the future of both artificial intelligence and climate science. The project demonstrates the scientific potential of adapting deep learning to supercomputers, which traditionally simulate physical and chemical processes such as nuclear explosions, black holes, or new materials. It also shows that machine learning can benefit from more computing power, if you can find it, which bodes well for future breakthroughs.

"We didn't know it could be done at this scale until we did it," says Rajat Monga, an engineering director at Google. He and other Googlers helped the project by adapting the company's open source machine learning software, TensorFlow, to Summit's giant scale.

Most of the work on scaling deep learning has been done in the data centers of Internet companies, where servers work together on problems by splitting them up, because the servers are connected relatively loosely rather than joined into one giant computer. Supercomputers like Summit have a different architecture, with specialized high-speed connections that link their thousands of processors into a single system that can operate as a whole. Until recently, there had been relatively little work on adapting machine learning to this kind of hardware.

Monga said that the work on adapting TensorFlow to Summit's scale will also inform Google's efforts to expand its internal artificial intelligence systems. Nvidia engineers also took part in the project, making sure that the tens of thousands of Nvidia graphics processors in the machine worked together smoothly.

Finding ways to put more computing power behind deep learning algorithms has played an important role in the technology's recent rise. The technology that Siri uses for voice recognition and Waymo cars use to read road signs became useful in 2012, when researchers adapted it to run on Nvidia graphics processors.

In an analysis published in May last year, researchers from OpenAI, a research institute in San Francisco co-founded by Elon Musk, estimated that the amount of computing power used in the largest publicly disclosed machine learning experiments has doubled roughly every 3.43 months since 2012; that amounts to an 11-fold increase each year. This progression helped bots from Alphabet win at complex board games and video games, and also contributed to a significant increase in the accuracy of Google Translate.
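The 11-fold figure follows directly from the doubling time. A minimal sketch of that arithmetic, assuming steady exponential growth (the function name is illustrative and does not come from the OpenAI analysis):

```python
def annual_growth_factor(doubling_months: float) -> float:
    """Growth over 12 months for a quantity that doubles every `doubling_months` months."""
    # Number of doublings that fit in a year, used as the exponent of 2.
    doublings_per_year = 12 / doubling_months
    return 2 ** doublings_per_year


# Doubling time quoted in the article: 3.43 months.
factor = annual_growth_factor(3.43)
print(f"{factor:.1f}x per year")  # about 11.3x, i.e. roughly 11-fold
```

A doubling time of 3.43 months means about 3.5 doublings per year, and 2 raised to the power 3.5 is a little over 11.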

Google and other companies are now developing new kinds of chips tailored to AI in order to continue this trend. Google says that "pods" tightly integrating a thousand of its AI chips, called tensor processing units, or TPUs, can provide 100 petaflops of computing power, one tenth of the rate reached by Summit.
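The one-tenth comparison is a unit conversion between petaflops and exaflops. A minimal sketch, taking Summit's rate as the round figure of one exaflop (a billion billion operations per second) quoted earlier in the article; the variable names are illustrative:

```python
PETA = 10**15  # one petaflop: a quadrillion operations per second
EXA = 10**18   # one exaflop: a billion billion operations per second

tpu_pod_ops = 100 * PETA  # Google's stated figure for a TPU pod
summit_ai_ops = 1 * EXA   # round figure for Summit's deep learning run

# Fraction of Summit's rate that a single pod would deliver.
ratio = tpu_pod_ops / summit_ai_ops
print(f"A TPU pod delivers {ratio:.0%} of Summit's rate")
```

The two figures are not a like-for-like benchmark, since the article gives no details on numerical precision or workload for either system.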

The Summit project's contribution to climate science is to show how giant-scale AI could improve our understanding of future weather patterns. When researchers generate century-long climate predictions, reading the resulting forecast becomes a challenge. "Imagine that you have a YouTube movie that runs for 100 years. There is no way to find all the cats and dogs in it by hand," says Prabhat. Software is usually used to automate this process, but it is imperfect. Summit's results showed that machine learning can do it much better, which should help in predicting storm impacts such as flooding.

According to Michael Pritchard, a professor at the University of California, Irvine, running deep learning on supercomputers is a relatively new idea that has arrived at a convenient time for climate researchers. The slowing pace of improvement in conventional processors has led engineers to equip supercomputers with a growing number of graphics chips, whose performance has risen more steadily. "There came a point where you could no longer increase computing power in the usual way," says Pritchard.

This shift posed challenges for traditional simulations, which had to be adapted. It also opens the door to harnessing the power of deep learning, which is naturally suited to graphics chips. It may give us a clearer view of the future of our climate.

Recommended

Is it possible digital immortality and whether it

when will man become immortal through digital technologies. I don't believe it. And you? In 2016, the youngest daughter Jang JI-sen This died of the disease associated with the blood. But in February, the mother was reunited with her daughter in virt...

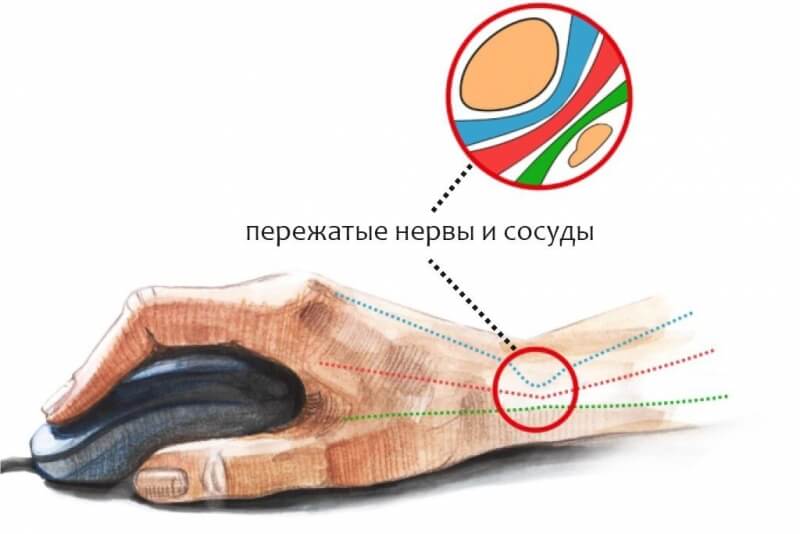

Why bad long sit at the computer and how to fix it

I've recently conducted a small survey among friends and acquaintances about how they evaluate their effectiveness when working remotely. Almost everyone I know — now work from home with computer and phone. And, as it turned out, even those who...

Parametric architecture: can artificial intelligence to design cities?

When you think about the future, what pictures arise in front of your eyes? As a lover of retro-futurism – a genre which is based on representation of the people in the past about the future, I always imagined the city of the future built buildings, ...

Related News

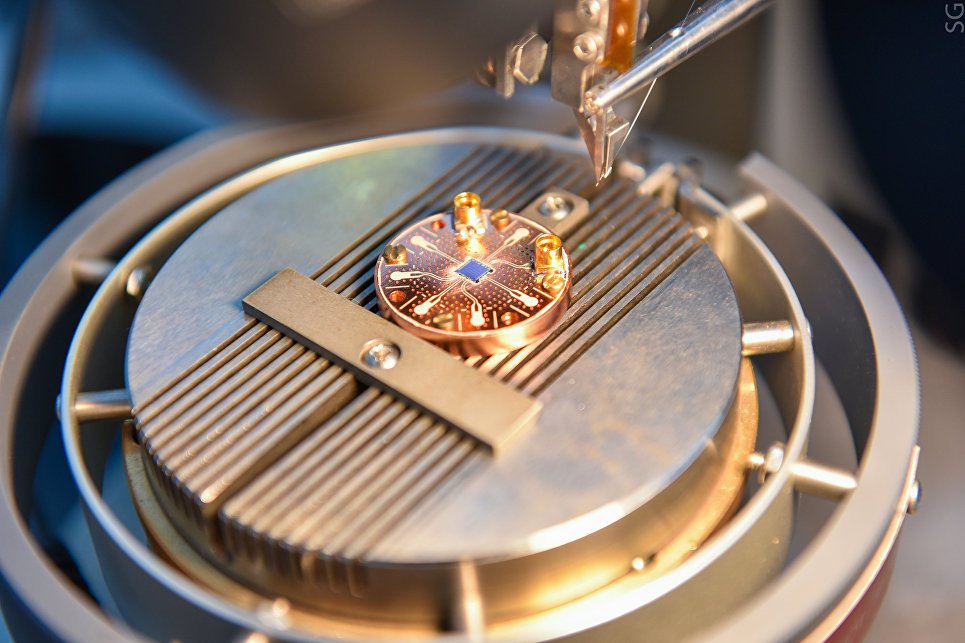

Physicists have calculated the time of the state of superposition of graphene qubits

the Possibility of practical use of quantum computers one step closer thanks to graphene. Experts from the Massachusetts Institute of technology and their colleagues from other research institutions were able to calculate the time...

At MIT used a biological virus in order to speed up your computer

whenever the computer (and any other electronic device) processes the data, there is a small delay, that is to say, the transfer of information "from one equipment to another" (e.g. from the memory to physical). The more powerful ...

New particles could open the way to photonic computers

All modern electronic devices use to transmit information of the electrons. Now in full swing, the development of quantum computers, which many consider the future replacement of traditional devices. However, there is another, no ...

New computer type architecture of the brain can improve data processing methods

Scientists from IBM are developing a new computer architecture that will be better suited to handle increasing volumes of data coming from algorithms of artificial intelligence. They draw their inspiration from the human brain, an...

The company D-Wave has launched an open and free platform for quantum computing

With the wide spread of quantum computers needs to produce a real revolution in the field of computer science, providing not only extra power but also improved performance in cybersecurity. We already have quantum computers, but ...

Excursion to the Museum of computers that changed the world

For some reason, old computers don't become classics. Few people contains them with the same concern as contain antique furniture or cars. Probably the reason that they are not suitable for use in the modern world even though the ...

The Intel found 3 vulnerabilities. They allow you to steal

Today Intel announced three new vulnerabilities of their processors. According to the American company, these vulnerabilities can be exploited to gain access to some data stored in the computer memory. Under the threat of a proces...

9-th generation Intel CPU with 8 cores will be presented October 1

there were rumors that Intel introduced the 9 generation of processors in October. Although the 10-nanometer chips Cannon Lake company was postponed until 2019, the updates this year will be based on the improvement of the existin...

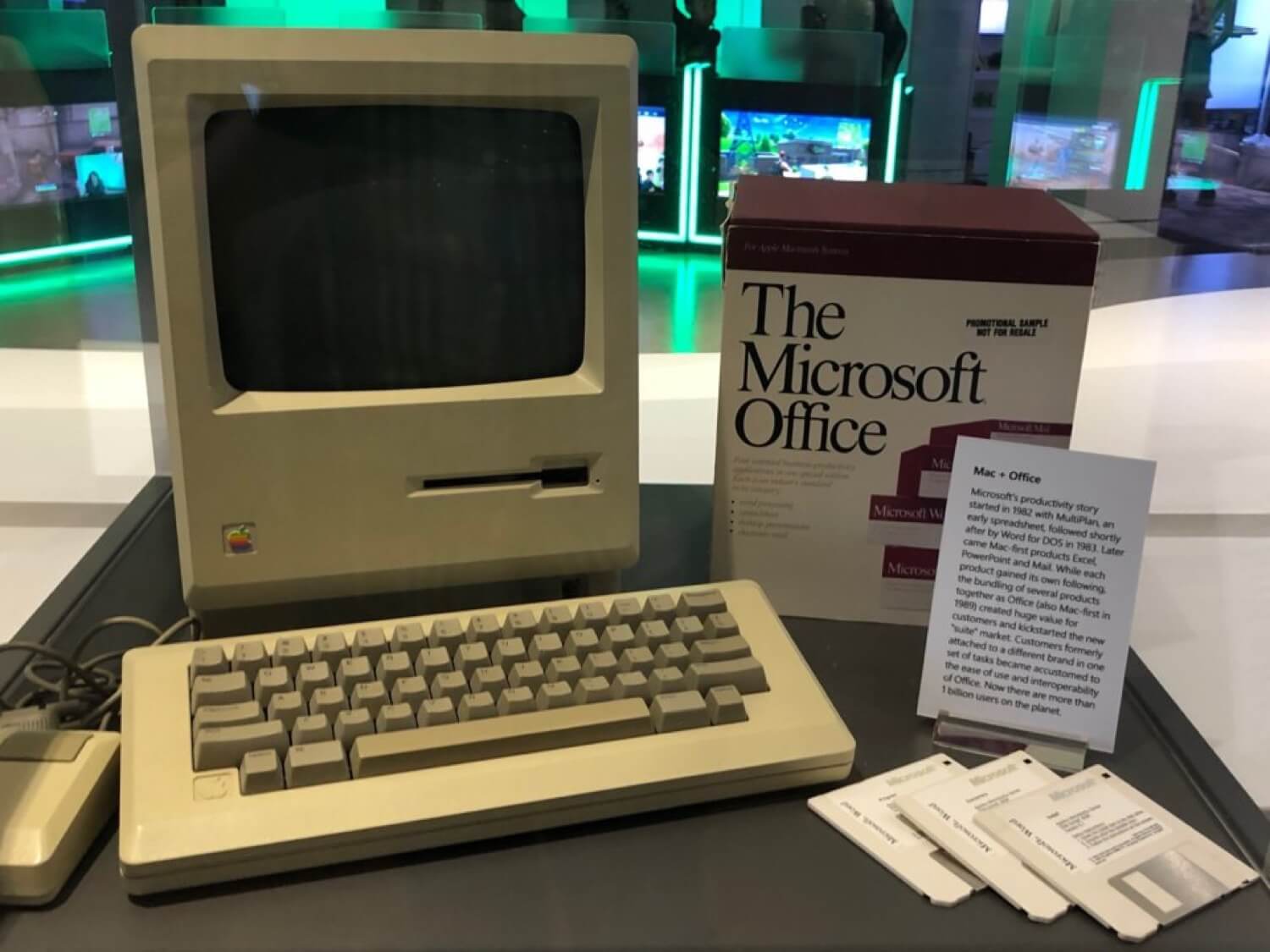

The story of the first Macintosh computer, which is the headquarters of Microsoft

Not so long ago the history of the first business card of bill gates and Paul Allen, which is stored in the exhibition hall of the headquarters of Microsoft are available to visit. In this room there's something else worthy of not...

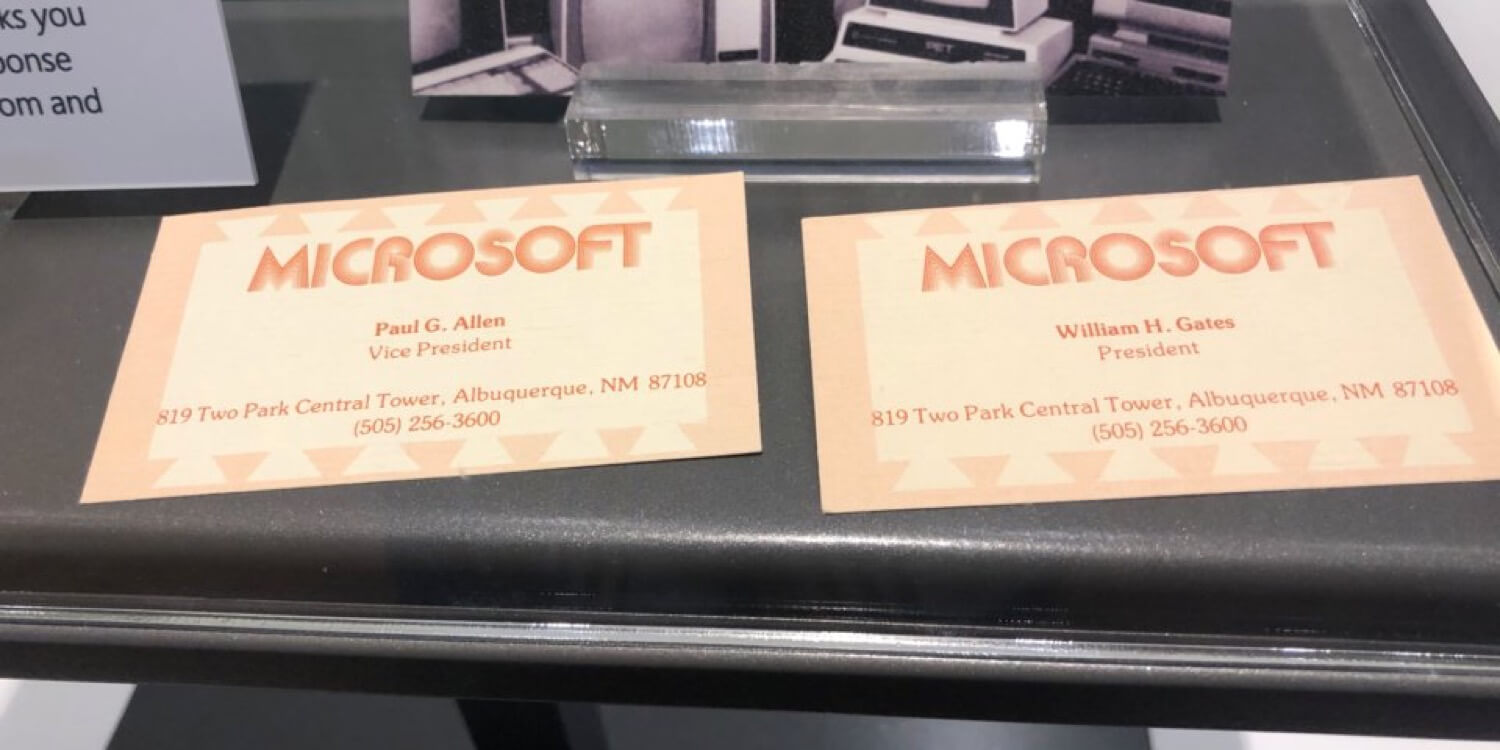

The story of the first business card of bill gates and Paul Allen

All with something started. Microsoft started with two friends who decided to write software for the microcomputer. They started a company and made myself business cards. Today, these business cards are stored in the Microsoft hea...

Users of computers began to threaten the publication of personal videos and browser history

computer Users around the world have started to receive e-mails from Scam to extort money. Depending on who sent the letter, its contents may change. However, colleagues from Business Insider was able to extract common features in...

7 laptops for those who want to buy the best

If you want to buy the most efficient laptop today, you need to look at the model with a premium design and materials, high-resolution display, processor and 16 gigabytes of RAM. In this case, you have the choice not too big. Here...

The hacker stole the files of the U.S. army, but could not sell them even for $ 150

the Hacker has guessed to use the vulnerability of routers to gain access to the files of the U.S. army. The obtained data he attempted to sell on the forum on the darknet, but was unable to find an interested buyer, even reducing...

Secret "pocket" Surface from Microsoft is with a folding screen

Microsoft is working on a mysterious new device from the Surface has at least a few years. The device, codenamed "Andromeda" was repeatedly flashed in patents, reports, references in the operating system, and should include design...

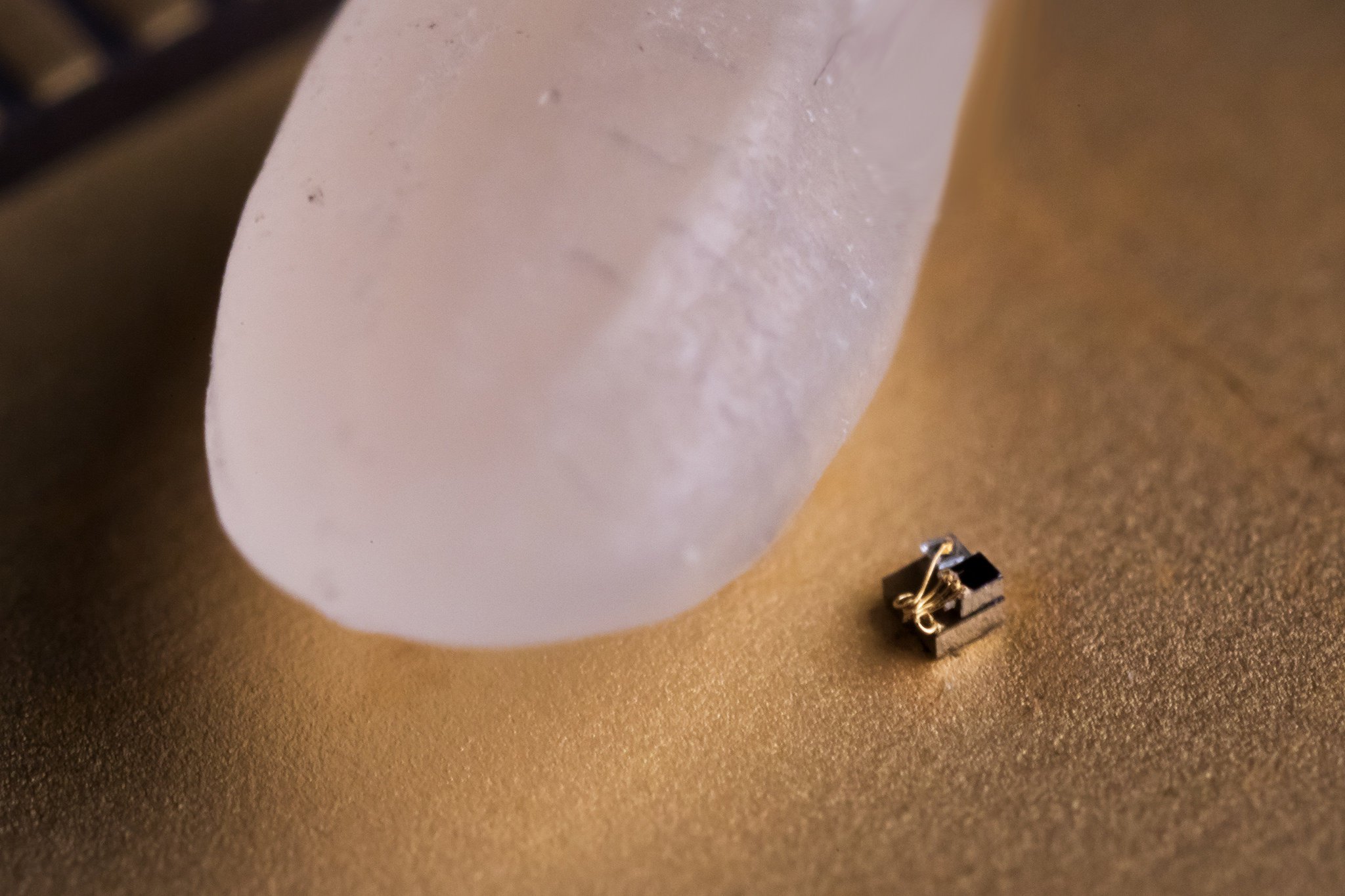

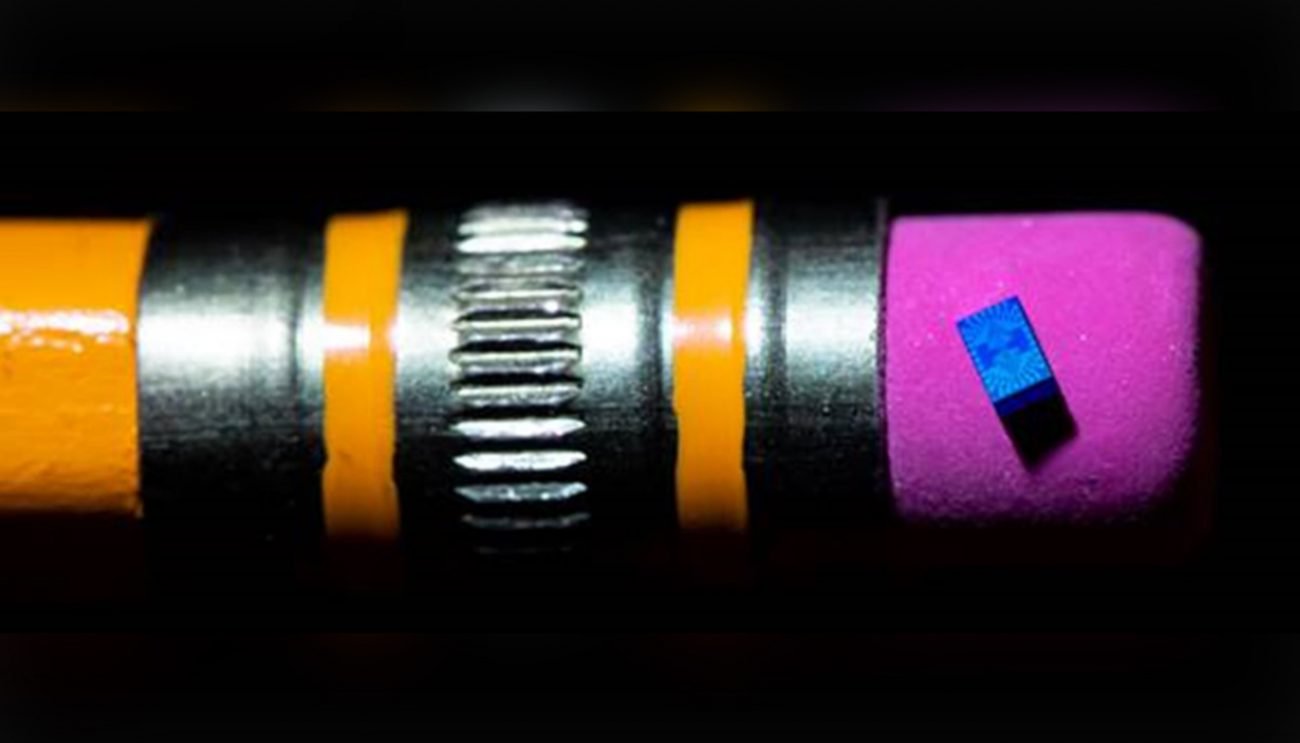

The smallest computer in the world has become even smaller

When IBM said in March that created the smallest computer in the world, scientists from the University of Michigan with displeasure raised eyebrows because IBM is the championship belonged to them. Now the smallest computer in the...

Intel has created the world's smallest quantum chip

Already practically does not remain doubts that in the future quantum computers, if not will replace the familiar to us computers, then certainly strongly they will press. The success in this sphere do have a company and recently,...

In the United States represented the most powerful supercomputer in the world

the US Department of energy introduced the world's most powerful supercomputer, selected the title from the Chinese Sunway TaihuLight. Peak performance supercomputer American Summit reached 200 petaflops. (200 quadrillion calculat...

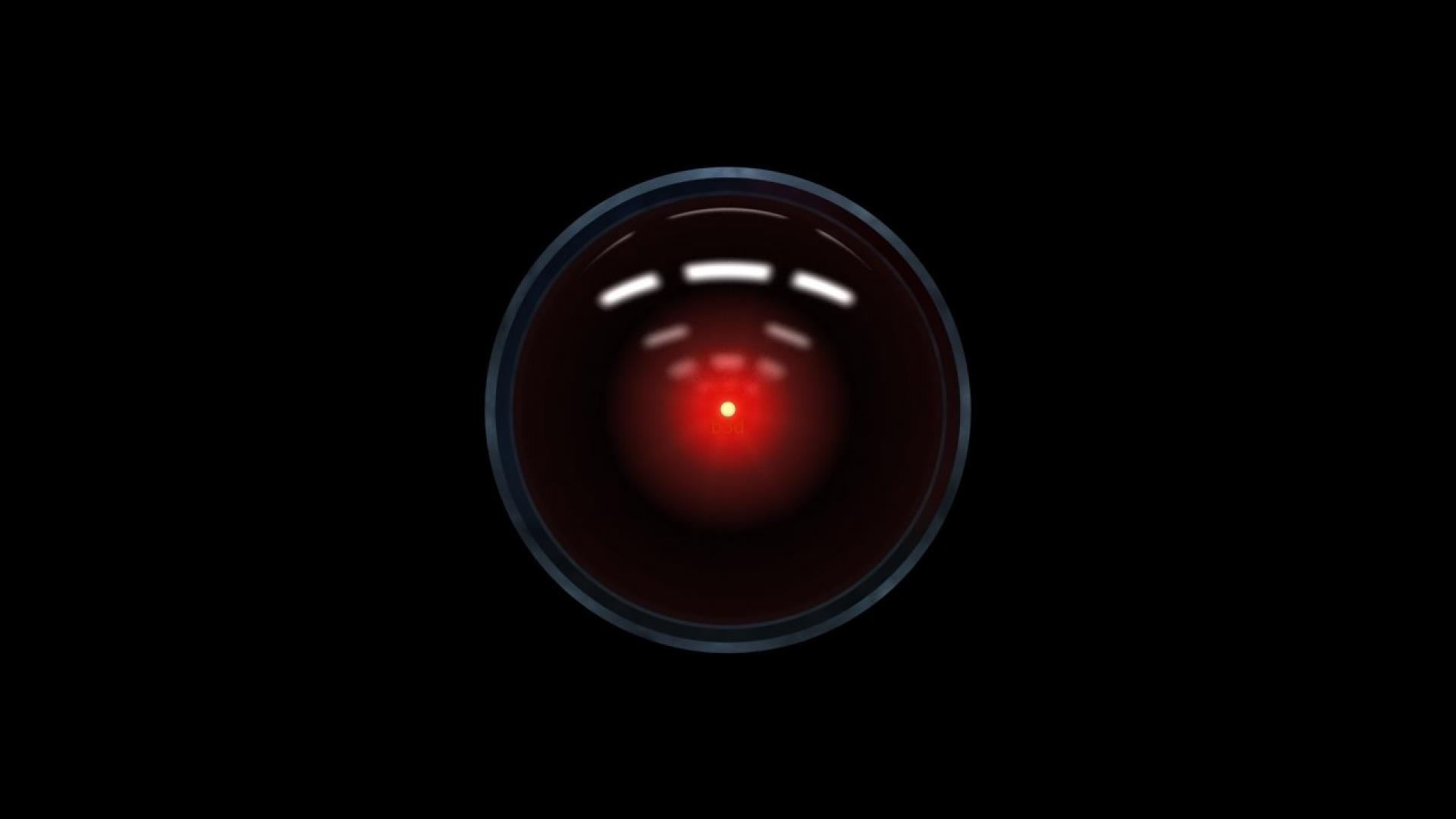

HAL 9000 will never appear: emotions are not programmed

HAL 9000 is one of the most famous cinematic artificial intelligence. This superior form of intelligent computer malfunctioned on the way to Jupiter in the iconic film, Stanley Kubrick's "Space Odyssey 2001", which is currently ce...

Developed a fundamentally new type of qubit for a quantum computer

an international group of scientists, consisting of Russian, British and German experts in the field of quantum technologies has created a revolutionary technology of qubits based on dzhozefsonovskikh the transition that represent...

Every Monday in the new issue of «News high-tech» we summarize the previous week, talking about some of the most important events, the key discoveries and inventions. This time we will focus on the toothbrush for mining,...

Comments (0)

This article has no comment, be the first!